Xbox Kinect system helps autistic children play music.

Skeleton Tracking with the Kinect - Learning. This tutorial explains how to track human skeletons using the Kinect.

The OpenNI library can identify the position of key joints on the human body, such as the hands, elbows, knees and head. These points form a representation we call the 'skeleton'.

Enabling Skeleton Tracking

Let us start with the code we had by the end of the tutorial called Drawing Depth with the Kinect:

```processing
import SimpleOpenNI.*;

SimpleOpenNI context;

void setup() {
  // instantiate a new context
  context = new SimpleOpenNI(this);
  // enable depth image generation
  context.enableDepth();
  // create a window the size of the depth information
  size(context.depthWidth(), context.depthHeight());
}

void draw() {
  // update the camera
  context.update();
  // draw depth image
  image(context.depthImage(), 0, 0);
}
```

First we must tell the OpenNI library to determine joint positions by enabling the skeleton tracking functionality:

```processing
// enable skeleton generation for all joints
context.enableUser(SimpleOpenNI.SKEL_PROFILE_ALL);
```
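Once tracking is running, each joint is reported as a 3D position (OpenNI's real-world coordinates are in millimetres). A typical next step is deriving something useful from several joints, such as how far an arm is bent. The sketch below is plain Java rather than SimpleOpenNI code: the `Joint` class and `angleDegrees` method are hypothetical names, and in a real sketch the three positions would come from the library's joint queries for the shoulder, elbow and hand.

```java
// Plain-Java sketch, independent of SimpleOpenNI: the angle at a joint
// (e.g. the elbow) computed from three tracked joint positions.
// Joint and angleDegrees are hypothetical names, not library API.
public class JointAngle {

    /** A 3D joint position, e.g. OpenNI real-world coordinates in millimetres. */
    public static final class Joint {
        final double x, y, z;

        public Joint(double x, double y, double z) {
            this.x = x;
            this.y = y;
            this.z = z;
        }
    }

    /** Angle in degrees at 'middle' between the segments middle->a and middle->b. */
    public static double angleDegrees(Joint a, Joint middle, Joint b) {
        // Vectors from the middle joint (e.g. the elbow) to its neighbours.
        double ux = a.x - middle.x, uy = a.y - middle.y, uz = a.z - middle.z;
        double vx = b.x - middle.x, vy = b.y - middle.y, vz = b.z - middle.z;
        double lenU = Math.sqrt(ux * ux + uy * uy + uz * uz);
        double lenV = Math.sqrt(vx * vx + vy * vy + vz * vz);
        if (lenU == 0 || lenV == 0) {
            throw new IllegalArgumentException("neighbouring joints must differ from the middle joint");
        }
        double cos = (ux * vx + uy * vy + uz * vz) / (lenU * lenV);
        // Clamp against floating-point drift before taking acos.
        cos = Math.max(-1.0, Math.min(1.0, cos));
        return Math.toDegrees(Math.acos(cos));
    }

    public static void main(String[] args) {
        Joint shoulder = new Joint(0, 300, 2000);
        Joint elbow = new Joint(0, 0, 2000);
        Joint hand = new Joint(300, 0, 2000);
        System.out.println("elbow angle: " + angleDegrees(shoulder, elbow, hand));
    }
}
```

A straight arm gives roughly 180 degrees and a right-angle bend roughly 90, so the value could drive a sound or visual parameter directly.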

Dale Phurrough. OpenNI access for your depth sensors like Microsoft Kinect in Cycling ’74’s Max

jit.openni is a rich Max Jitter external that lets you use sensors like the Microsoft Kinect and ASUS Xtion PRO in your patchers.

It exposes much of the functionality of sensors like the Kinect as an easy-to-use native extension for the Cycling ’74 Max application platform on Windows and Mac OS X computers. It has support for:

Licensing: jit.openni is free software. You can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

Documentation: full wiki documentation and installation instructions are available on GitHub.

New Kinect Gets Closer to Your Body [Videos, Links]. The new, svelte-looking Kinect.

![New Kinect Gets Closer to Your Body [Videos, Links]](http://cdn.pearltrees.com/s/pic/th/kinect-closer-videos-links-58144800)

It’s not that it looks better, though, that matters: it’s that it sees better. (Image courtesy Microsoft.) It’s a new world for media artists, one in which we look to the latest game console news because it affects our art-making tools. And so it is that, along with a new Xbox, Microsoft has a new Kinect.

Dale Phurrough. Cycling ’74 Max external using Microsoft Kinect SDK

dp.kinect is an external that can be used within the Cycling ’74 Max development environment to control and receive data from your Microsoft Kinect.

Setup and usage docs are available at

It is based on the official Microsoft Kinect platform. You will need at least v1.5.2 of the Kinect runtime/drivers to use this external. Its extensive features include face tracking, sound tracking, speech recognition, skeletal tracking, depth images, color images, point clouds, the accelerometer, multiple Kinects, and more. It was primarily developed and tested against Max 6.x on 32- and 64-bit platforms.

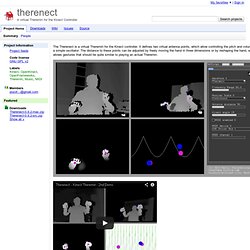

It has only been casually tested against Max 5.1.9. It has the same inlets and outlets as my other external, jit.openni.

Kinect2Scratch 2.5.

Teaching kids to program using Scratch and the Kinect - Stephen Howell [YouTube]

Therenect - A virtual Theremin for the Kinect Controller. The Therenect is a virtual Theremin for the Kinect controller.

It defines two virtual antenna points, which control the pitch and volume of a simple oscillator. The distance to these points can be adjusted by moving the hand freely in three dimensions or by reshaping the hand, which allows gestures that should be quite similar to playing an actual Theremin. This video was recorded before this release; an updated video introducing the improved features of the current version will follow soon.

Configuration:
- Oscillator: Theremin, Sinewave, Sawtooth, Squarewave
- Tonality: continuous mode or Chromatic, Ionian and Pentatonic scales
- MIDI: optionally send MIDI note on/off events to the selected device and channel
- Kinect: adjust the sensor camera angle

Acknowledgments: this application was created by Martin Kaltenbrunner at the Interface Culture Lab.

Bikinect - Kinect Tool Suite for easy development & live performances.

The V Motion Project.
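Therenect's own source is not reproduced here, but the mapping its description implies (hand distance to pitch and volume, optionally snapped to a chromatic, Ionian or pentatonic scale) can be sketched in a few lines. This Java sketch assumes distances in millimetres with a usable range of 0 to 600 mm, a pitch range of MIDI notes 48 to 84, and equal temperament with A4 = 440 Hz; all of these constants, and every method name, are illustrative assumptions.

```java
// Hypothetical sketch of a theremin-style mapping; this is NOT Therenect's code.
// Assumptions: distances in millimetres, usable range 0..600 mm,
// pitch range MIDI 48..84 (C3..C6), equal temperament with A4 = 440 Hz.
public class ThereminMapping {

    static final double MAX_DISTANCE_MM = 600.0;
    static final double LOW_NOTE = 48.0;
    static final double HIGH_NOTE = 84.0;

    // Scales as semitone offsets from C within one octave.
    public static final int[] CHROMATIC = {0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11};
    public static final int[] IONIAN = {0, 2, 4, 5, 7, 9, 11};
    public static final int[] PENTATONIC = {0, 2, 4, 7, 9};

    /** Clamp a distance to a 0..1 fraction of the usable range. */
    static double normalise(double distanceMm) {
        return Math.max(0.0, Math.min(1.0, distanceMm / MAX_DISTANCE_MM));
    }

    /** Pitch hand: closer to the antenna gives a higher (fractional) MIDI note. */
    public static double distanceToNote(double distanceMm) {
        return HIGH_NOTE - normalise(distanceMm) * (HIGH_NOTE - LOW_NOTE);
    }

    /** Volume hand: closer to the antenna is quieter, as on a classic theremin. */
    public static double distanceToVolume(double distanceMm) {
        return normalise(distanceMm);
    }

    /** Snap a fractional MIDI note to the nearest note of the scale (ties go to the lower note). */
    public static int quantize(double note, int[] scale) {
        int base = (int) Math.floor(note / 12.0) * 12;
        int best = base;
        double bestErr = Double.MAX_VALUE;
        // Search the octave below, the note's own octave and the octave above.
        for (int octave = base - 12; octave <= base + 12; octave += 12) {
            for (int degree : scale) {
                int candidate = octave + degree;
                double err = Math.abs(candidate - note);
                if (err < bestErr) {
                    bestErr = err;
                    best = candidate;
                }
            }
        }
        return best;
    }

    /** Equal-tempered frequency in Hz of a (possibly fractional) MIDI note. */
    public static double noteToHz(double note) {
        return 440.0 * Math.pow(2.0, (note - 69.0) / 12.0);
    }

    public static void main(String[] args) {
        double note = distanceToNote(250.0); // pitch hand 25 cm from its antenna
        System.out.printf("continuous: %.2f Hz%n", noteToHz(note));
        System.out.printf("pentatonic: %.2f Hz%n", noteToHz(quantize(note, PENTATONIC)));
        System.out.printf("volume: %.2f%n", distanceToVolume(400.0));
    }
}
```

In continuous mode the fractional note goes straight to `noteToHz`; the scale modes call `quantize` first. Resolving ties toward the lower note is an arbitrary choice here.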

Tod Machover & Dan Ellsey: Releasing the music in your head.

ViiM SDK for 3D sensors like Kinect.

CAN Kinect Physics Tutorial.

KinectA.

Kinect Physics Tutorial for Processing.

Digital Puppetry: Tryplex Makes Kinect Skeleton Tracking Easier, for Free, with Quartz Composer

Via the experiments of the movement+music+visual collective in Ireland, we see another great, free tool built on Apple’s Quartz Composer developer tool. Tryplex is a set of macro patches, all open source, that makes Kinect skeleton tracking easier. There’s even a puppet tool and skeleton recorder. Aesthetically, the video below is all stick-figure stuff (which is the only reason I can imagine for it getting any dislikes on YouTube). But it shouldn’t take much imagination to see the potential here. For one example, see the video at top, something that must make Terry Gilliam get hot and bothered (or wish he had been born later).

Features:
- Synapse support, for interoperability with other Mac and Windows visual apps, and depth image visibility
- OSC messages
- Loads of examples
- Puppet tool
- Skeleton recording
- 3D sculpting and modeling ideas

Have a look:

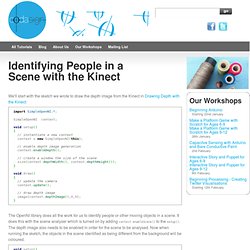

Identifying People in a Scene with the Kinect - Learning

We'll start with the sketch we wrote to draw the depth image from the Kinect in Drawing Depth with the Kinect:

```processing
import SimpleOpenNI.*;

SimpleOpenNI context;

void setup() {
  // instantiate a new context
  context = new SimpleOpenNI(this);
  // enable depth image generation
  context.enableDepth();
  // create a window the size of the scene
  size(context.depthWidth(), context.depthHeight());
}

void draw() {
  // update the camera
  context.update();
  // draw depth image
  image(context.depthImage(), 0, 0);
}
```

The OpenNI library does all the work of identifying people or other moving objects in the scene for us.
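The segmentation OpenNI performs ends up as a per-pixel user label: each depth pixel carries the ID of the user it belongs to, with 0 meaning background. Assuming that label map arrives as a row-major `int[]` the same size as the depth image (SimpleOpenNI exposes such a per-pixel user map, though the accessor differs between library versions), the sketch below counts each person's pixels and finds their 2D centroid, for example to place a graphic over them. `UserCentroids` and `centroids` are hypothetical names.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch, assuming a row-major per-pixel user label map where 0 = background
// and any other value is a user ID (the shape OpenNI's user segmentation produces).
public class UserCentroids {

    /** Maps each user ID to {pixelCount, centroidX, centroidY} in pixel units. */
    public static Map<Integer, double[]> centroids(int[] labels, int width) {
        Map<Integer, double[]> stats = new TreeMap<>();
        for (int i = 0; i < labels.length; i++) {
            int id = labels[i];
            if (id == 0) {
                continue; // background pixel: no user here
            }
            double[] s = stats.computeIfAbsent(id, k -> new double[3]);
            s[0] += 1;          // pixel count
            s[1] += i % width;  // running sum of x (column)
            s[2] += i / width;  // running sum of y (row)
        }
        // Turn the coordinate sums into means.
        for (double[] s : stats.values()) {
            s[1] /= s[0];
            s[2] /= s[0];
        }
        return stats;
    }

    public static void main(String[] args) {
        int width = 4;
        int[] labels = {
            0, 1, 1, 0,
            0, 1, 1, 0,
            2, 0, 0, 0,
        };
        for (Map.Entry<Integer, double[]> e : centroids(labels, width).entrySet()) {
            double[] s = e.getValue();
            System.out.printf("user %d: %d px, centroid (%.1f, %.1f)%n",
                    e.getKey(), (int) s[0], s[1], s[2]);
        }
    }
}
```

For the toy 4x3 map in `main`, user 1 covers 4 pixels centred at (1.5, 0.5) and user 2 a single pixel at (0.0, 2.0).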

Deece Records: Kinect.

SimpleKinect

simpleKinect is an interface application for sending data from the Microsoft Kinect to any OSC-enabled application.

The application attempts to improve on similar software by offering more OpenNI features and more user control. The interface was built with Processing, using the libraries controlP5, oscP5 and simple-openni. Because I used open-source tools, and because the purpose of the project is to stimulate creativity, simpleKinect is free to use.

simpleKinect features include auto-calibration.
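simpleKinect (like Tryplex) hands Kinect data to other programs as OSC messages. oscP5 normally does the encoding, but the wire format is simple enough to show directly: per the OSC 1.0 specification, a message is an address string, a type-tag string starting with `,`, then the arguments, where every string is NUL-terminated and padded to a multiple of 4 bytes and every float32 is big-endian. The address `/righthand` and port 12345 below are hypothetical; simpleKinect's own address names and ports are not given here.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Minimal OSC 1.0 message encoder supporting float32 arguments only.
public class OscEncoder {

    /** OSC-string: ASCII bytes, then 1 to 4 NUL bytes so the total is a multiple of 4. */
    static void writeOscString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        out.write(bytes, 0, bytes.length);
        int pad = 4 - (bytes.length % 4); // always >= 1, so the string is NUL-terminated
        for (int i = 0; i < pad; i++) {
            out.write(0);
        }
    }

    /** Encodes an OSC message whose arguments are all float32 (type tag 'f'). */
    public static byte[] encode(String address, float... args) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeOscString(out, address);
        StringBuilder tags = new StringBuilder(",");
        for (int i = 0; i < args.length; i++) {
            tags.append('f');
        }
        writeOscString(out, tags.toString());
        ByteBuffer floats = ByteBuffer.allocate(4 * args.length); // big-endian by default
        for (float f : args) {
            floats.putFloat(f);
        }
        out.write(floats.array(), 0, floats.capacity());
        return out.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical message: right-hand position in millimetres.
        byte[] msg = encode("/righthand", 120.5f, -40.0f, 1850.0f);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(msg, msg.length,
                    InetAddress.getLoopbackAddress(), 12345));
        }
        System.out.println("sent " + msg.length + " bytes");
    }
}
```

The three-float `/righthand` message is 32 bytes: 12 for the padded address, 8 for the padded `,fff` tag string and 12 for the floats.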

Installation - simple-openni - How to install. - A simple OpenNI wrapper for Processing.

Getting Started with Kinect and Processing. So, you want to use the Kinect in Processing.

Great. This page documents the current state of my Processing Kinect library, with some tips and info.

The current state of affairs: since the Kinect launched in November 2010, several models have been released. Here's a quick list of what is out there and what is supported in Processing for Mac OS X.

Getting started easily with gesture tracking using a Kinect! [hacking]

Home - Kinectar.

Animation Test: Kinect + After Effects!

Kinect for Windows.

N1ckFG/KinectToPin.

A Revamped Collaborative Kinect Puppet.

Kinect MoCap Animation in After Effects - Part 2: Motion Capture with KinectToPin. This tutorial is now obsolete.

Check out the new KinectToPin website for the latest version of the software and how to use it; it’s dramatically easier now. Welcome back. You have your USB adapter and you’ve installed all the software I linked in Part 1, right? Now it’s time to get KinectToPin up and running. You don’t have to install it; just unzip it and drop its folder inside your Processing sketchbook folder, which on a Windows machine is usually a folder in My Documents called “Processing.” Each sketch folder must have the same name as the sketch (program) it contains, or you won’t be able to load it. Tip: SimpleOpenNI installs the same way as KinectToPin, but you need to put its folder inside Processing => libraries instead of directly inside the Processing folder.

And now you’re ready to go! Go to File -> Sketchbook -> KinectToPin and hit the play button. You can press cam to check that your Kinect is seeing things.

Kinect MoCap Animation in After Effects - Part 1: Getting Started. This tutorial is now obsolete.

Check out the new KinectToPin website for the latest version of the software and how to use it; it’s dramatically easier now. Hello, I’m Victoria Nece. I’m a documentary animator, and today I’m going to show you how to use your Kinect to animate a digital puppet like this one in After Effects. If you have a Kinect that came with your Xbox, the first thing you need to do is buy an adapter so you can plug it into your computer’s USB port. You don’t need the official Microsoft one; I got a knockoff version from Amazon for six bucks and it’s working just fine. Next you’re going to need to install a ton of different software. Here’s a quick overview of how it’s all going to work.

Kinect / Kinect.

The Kinect is a camera that can capture three different types of images: a depth image, an RGB image and an infrared image.

(Figure: depth, RGB and infrared images)

The depth image can be used to assemble 3D meshes in real time.

The RGB image can be mapped onto this 3D mesh, or the mesh can be deformed to create interesting effects. By applying analysis modules to the depth image, it is possible to track several interactors and interpret their movements.

(Figure: scene, skeletal and gesture analysis modules)

Using the Kinect requires a driver:
- OpenNI: made by the designers of the Kinect and very feature-rich
- libfreenect: made by the community and very basic

Sometimes you need to download and install the drivers, and sometimes they are already included and compiled.
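The Kinect overview notes that the depth image can be assembled into 3D meshes in real time. A common first step is back-projecting each depth pixel through a pinhole camera model, X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy, then joining neighbouring valid pixels into triangles. The intrinsics below (fx = fy = 580, cx = 320, cy = 240 for a 640x480 depth image) are rough assumed values, not a calibration of any particular Kinect, and depth is assumed to be in millimetres with 0 meaning no reading. `DepthToMesh` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: depth image -> point cloud -> grid triangle mesh.
// The intrinsics are ASSUMED approximate values, not a real calibration.
public class DepthToMesh {

    static final double FX = 580.0, FY = 580.0; // focal lengths in pixels (assumed)
    static final double CX = 320.0, CY = 240.0; // principal point in pixels (assumed)

    /** Back-projects depth (mm, row-major, 0 = no reading) to x,y,z triples in mm; NaN if invalid. */
    public static double[] toPoints(int[] depthMm, int width, int height) {
        double[] pts = new double[width * height * 3];
        for (int v = 0; v < height; v++) {
            for (int u = 0; u < width; u++) {
                int i = v * width + u;
                double z = depthMm[i];
                if (z <= 0) {
                    pts[3 * i] = pts[3 * i + 1] = pts[3 * i + 2] = Double.NaN;
                    continue;
                }
                pts[3 * i] = (u - CX) * z / FX;
                pts[3 * i + 1] = (v - CY) * z / FY;
                pts[3 * i + 2] = z;
            }
        }
        return pts;
    }

    /** Two triangles (vertex indices) per 2x2 pixel block whose four depths are all valid. */
    public static List<int[]> triangles(int[] depthMm, int width, int height) {
        List<int[]> tris = new ArrayList<>();
        for (int v = 0; v + 1 < height; v++) {
            for (int u = 0; u + 1 < width; u++) {
                int a = v * width + u; // top-left
                int b = a + 1;         // top-right
                int c = a + width;     // bottom-left
                int d = c + 1;         // bottom-right
                if (depthMm[a] > 0 && depthMm[b] > 0 && depthMm[c] > 0 && depthMm[d] > 0) {
                    tris.add(new int[] {a, c, b});
                    tris.add(new int[] {b, c, d});
                }
            }
        }
        return tris;
    }

    public static void main(String[] args) {
        int w = 3, h = 2;
        int[] depth = {1000, 1000, 0, 1000, 1000, 1000}; // one missing reading
        double[] pts = toPoints(depth, w, h);
        List<int[]> tris = triangles(depth, w, h);
        System.out.println("points: " + pts.length / 3 + ", triangles: " + tris.size());
    }
}
```

Image rows grow downwards, so Y points down in this sketch. Real-time meshers often also drop triangles that span a large depth jump (a silhouette edge); that threshold is left out to keep the example short.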