Google Colab: how to read data from my Google Drive? 4 Awesome Ways Of Loading ML Data In Google Colab. Google Colaboratory, or Colab, has been one of the favorite development environments for ML beginners as well as researchers.

It is a cloud-based Jupyter notebook, so there have to be some awesome ways of loading machine learning data right from your local machine to the cloud. We’ll be discussing some methods that let you avoid clicking the “Upload” button directly! If you are working on a project which has its own dataset, such as an object detection or classification model, you will probably want to pull the dataset from GitHub directly. If the dataset is in an archive (.zip or .tar), we can get it into our Colab notebook: open the GitHub repo and copy the URL from the “View Raw” text. Note: The URL which you copy should end with the ? Alongside, you can clone the entire repo always, ! 2. Persisting data in Google Colaboratory.
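For the GitHub archive approach mentioned above, the download-and-extract step could look roughly like the sketch below. It uses only the Python standard library. The function names `download` and `extract_archive` and the URL in the usage comment are hypothetical placeholders, not taken from the original article.

```python
from __future__ import annotations

import tarfile
import urllib.request
import zipfile
from pathlib import Path


def download(url: str, dest: Path) -> Path:
    """Download `url` to `dest` and return `dest`."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    urllib.request.urlretrieve(url, str(dest))
    return dest


def extract_archive(archive: Path, out_dir: Path) -> list[str]:
    """Extract a .zip or .tar archive into `out_dir`; return the member names."""
    out_dir.mkdir(parents=True, exist_ok=True)
    if zipfile.is_zipfile(archive):
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(out_dir)
            return zf.namelist()
    if tarfile.is_tarfile(archive):
        with tarfile.open(archive) as tf:
            tf.extractall(out_dir)
            return tf.getnames()
    raise ValueError(f"{archive} is neither a zip nor a tar archive")


# Hypothetical usage in a Colab cell (replace the URL with the raw link you copied):
# archive = download("https://github.com/<user>/<repo>/raw/<branch>/dataset.zip",
#                    Path("/content/dataset.zip"))
# extract_archive(archive, Path("/content/dataset"))
```

Checking the archive type from its contents rather than its file extension keeps the helper working even when the copied URL carries a query string.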

How do you save data you've already loaded and processed in a Google Colab notebook so you don't have to reload it every time? Pytorch - Speed up datasets loading on Google Colab. Loading image data from Google Drive to Google Colab using PyTorch’s dataloader. Assuming you already have the dataset in your Google Drive, you can run the following command in a Google Colab notebook to mount Google Drive.

You will need to authenticate this step by clicking on the prompted link.

    from google.colab import drive
    drive.mount('/content/gdrive')

You can see the contents of the dataset (present in Google Drive) by running the following terminal-like command in your notebook.

    PATH_OF_DATA = '/content/gdrive/"My Drive"/name_of_dataset_folder'
    !ls {PATH_OF_DATA}

Please note the “ ” around My Drive. Now let’s see how we can use PyTorch’s dataloader module to read images of different classes. Note that there is no “ ” around My Drive. Bonus: If you want to see the images of your dataset in the notebook, run the following command.

    import matplotlib.pyplot as plt
    import numpy as np
    %matplotlib inline

    # helper function to un-normalize and display an image
    def imshow(img):
        img = img / 2 + 0.5  # unnormalize
        plt.imshow(np.transpose(img, (1, 2, 0)))  # convert from Tensor image
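The dataloader code itself is not included in the excerpt above. As a rough sketch of what reading class-labelled images from the mounted Drive might look like, the example below uses torchvision's `ImageFolder`, which assumes one sub-folder per class. The helper names `dataset_dir` and `make_loader`, the image size, and the batch size are assumptions for illustration. The normalization constants are chosen to match the `img / 2 + 0.5` un-normalize step in the `imshow` helper.

```python
from pathlib import Path

# In Python the path is an ordinary string, so "My Drive" needs no extra quotes
# (the quotes are only required for the shell-style !ls command).
DRIVE_ROOT = Path("/content/gdrive/My Drive")


def dataset_dir(folder_name, root=DRIVE_ROOT):
    """Return the path of a dataset folder on the mounted Drive."""
    return root / folder_name


def make_loader(data_dir, batch_size=32, image_size=224):
    """Build a DataLoader over a folder laid out as data_dir/<class_name>/<image>."""
    # Imported here so the path helper above works even without torch installed.
    from torch.utils.data import DataLoader
    from torchvision import datasets, transforms

    transform = transforms.Compose([
        transforms.Resize((image_size, image_size)),
        transforms.ToTensor(),
        # Maps pixel values from [0, 1] to [-1, 1]; img / 2 + 0.5 reverses this.
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    ])
    dataset = datasets.ImageFolder(str(data_dir), transform=transform)
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=True, num_workers=2)
    return loader, dataset.classes


# Hypothetical usage in a Colab cell after mounting Drive:
# loader, classes = make_loader(dataset_dir("name_of_dataset_folder"))
# images, labels = next(iter(loader))
```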

Speed up your image training on Google Colab - Data Driven Investor - Medium. Getting a factor 20 speedup in training a cats-vs-dogs classifier for free!

In one of my posts, I gave a detailed walkthrough on how to set up an environment for deep learning experiments using Google Colab and Google Drive. At that time, I was working on univariate time series. The total size of my training data was very small, so I didn’t notice one specific issue with my setup: it takes forever to copy files from Drive to Colab. While this is no problem when dealing with very small datasets, it’s very annoying when facing larger data, for example for image classification. Training times range from “very long” to “aborted because of Drive timeout”. There is, however, a workaround, which I’m describing in this post. I’ll be testing the performance by loading the dataset into a fastai databunch and running the learning rate finder (lr_find). The image above shows one of the few successful attempts.
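The excerpt does not show the workaround in full. One common way to avoid slow per-file reads from Drive, sketched here as an assumption rather than as the author's exact method, is to keep the dataset as a single zip archive on Drive, copy that one file to the Colab VM's local disk, and extract it there. The function name `stage_dataset` and the paths in the usage comment are hypothetical.

```python
import shutil
import zipfile
from pathlib import Path


def stage_dataset(zip_on_drive: Path, local_root: Path) -> Path:
    """Copy one zip from Drive to local disk, extract it, and return the data folder."""
    local_root.mkdir(parents=True, exist_ok=True)
    local_zip = local_root / zip_on_drive.name
    # A single large copy avoids opening thousands of small files over the Drive mount.
    shutil.copy(zip_on_drive, local_zip)
    target = local_root / zip_on_drive.stem
    with zipfile.ZipFile(local_zip) as zf:
        zf.extractall(target)
    local_zip.unlink()  # the local copy of the archive is no longer needed
    return target


# Hypothetical usage in a Colab cell after mounting Drive:
# data_dir = stage_dataset(Path("/content/gdrive/My Drive/size_test/cats_dogs.zip"),
#                          Path("/content/data"))
```

Training then reads from the returned local folder instead of from the Drive mount. The local disk is wiped when the runtime is recycled, so the staging step has to be rerun in each new session.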

Handling lots of small files is slow. zip_path = base/'size_test/cats_dogs.zip' ! 3 Essential Google Colaboratory Tips & Tricks. Like many of you, I have been very excited by Google's Colaboratory project.

While it isn't exactly new, its recent public release has generated a lot of renewed interest in the collaborative platform. For those that don't know, Google Colaboratory is... [...] a Google research project created to help disseminate machine learning education and research. It's a Jupyter notebook environment that requires no setup to use and runs entirely in the cloud. Here are a few simple tips for making better use of Colab's capabilities while you play around with it. 1. Select "Runtime," "Change runtime type," and this is the pop-up you see: Ensure "Hardware accelerator" is set to GPU (the default is CPU). Hidden features of Google Colaboratory - Keshav Aggarwal - Medium. When working with deep learning, some of the major blockers are training data and computation resources.

Google provides a free solution for computation resources with Colaboratory, Google’s free environment which offers GPUs. Colaboratory provides an online Jupyter notebook where you can select a GPU as the back-end server. You can read more about Colab here. Here I will add some of the features which might be helpful for you in some scenarios. Google Colaboratory Cheat Sheet - Rahul Metangale - Medium. Google Colaboratory recently started offering free GPUs.
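After setting the hardware accelerator to GPU, it can be useful to confirm from inside the notebook that a GPU is actually visible. The sketch below is one framework-independent way to do that, assuming the NVIDIA `nvidia-smi` tool is on the PATH of GPU runtimes. The function name `gpu_available` is hypothetical.

```python
import shutil
import subprocess


def gpu_available() -> bool:
    """Return True if nvidia-smi is present and lists at least one GPU."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return False  # tool not installed, so treat the runtime as CPU-only
    try:
        # `nvidia-smi -L` prints one line per device, each starting with "GPU".
        result = subprocess.run([exe, "-L"], capture_output=True, text=True, timeout=10)
    except (OSError, subprocess.SubprocessError):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU runtime" if gpu_available() else "CPU-only runtime")
```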

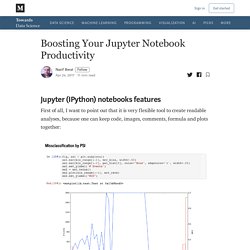

Colaboratory has become my go-to tool to develop and test deep learning models. I usually develop and test my models on Google Colaboratory before doing full training and testing on an AWS GPU instance. When I was exploring this tool initially, I had many questions, like how do I download my project from GitHub to Colab? Boosting Your Jupyter Notebook Productivity.

Jupyter is very extensible, supports other programming languages, and is easily hosted on almost any server: you only need ssh or http access to the server.

And it is completely free. The list of hotkeys is shown in Help > Keyboard Shortcuts (the list is extended from time to time, so don’t hesitate to look at it again). This gives an idea of how you’re expected to interact with the notebook. If you’re using the notebook constantly, you’ll of course learn most of the list. In particular:
Esc + F: Find and replace, searching only over the code, not outputs
Esc + O: Toggle cell output
You can select several cells in a row and delete / copy / cut / paste them. The simplest way is to share the notebook file (.ipynb), but not everyone is using notebooks, so the options are There are many plotting options: Magics are turning simple python into magical python.
In [1]: # list available python magics
        %lsmagic
Out[1]:
You can manage environment variables of your notebook without restarting the jupyter server process.
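As a small illustration of the environment-variable point, the sketch below sets and reads a variable from plain Python using `os.environ`. In a notebook cell, the `%env NAME=value` magic has a similar effect. The helper name `set_env` and the variable name `MY_DATA_DIR` are hypothetical.

```python
import os


def set_env(name, value):
    """Set an environment variable for the running kernel; return its previous value."""
    previous = os.environ.get(name)
    os.environ[name] = value
    return previous


# The change is visible immediately, with no server restart.
set_env("MY_DATA_DIR", "/content/data")
print(os.environ["MY_DATA_DIR"])
```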