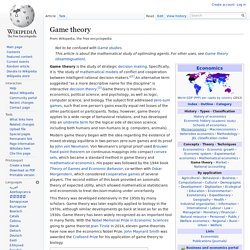

Wikipedia - Game theory. Game theory is the study of strategic decision making.

Specifically, it is "the study of mathematical models of conflict and cooperation between intelligent rational decision-makers."[1] An alternative term suggested "as a more descriptive name for the discipline" is interactive decision theory.[2] Game theory is mainly used in economics, political science, and psychology, as well as in logic, computer science, and biology. The subject first addressed zero-sum games, in which one person's gains exactly equal the net losses of the other participant or participants. Today, however, game theory applies to a wide range of behavioral relations and has developed into an umbrella term for the logical side of decision science, covering both humans and non-humans (e.g. computers, animals).

Modern game theory began with the idea regarding the existence of mixed-strategy equilibria in two-person zero-sum games and its proof by John von Neumann.

Game Theory. First published Sat Jan 25, 1997; substantive revision Wed May 5, 2010. Game theory is the study of the ways in which strategic interactions among economic agents produce outcomes with respect to the preferences (or utilities) of those agents, where the outcomes in question might have been intended by none of the agents.

The meaning of this statement will not be clear to the non-expert until each of the italicized words and phrases has been explained and featured in some examples. Doing this will be the main business of this article. First, however, we provide some historical and philosophical context in order to motivate the reader for the technical work ahead.

The mathematical theory of games was invented by John von Neumann and Oskar Morgenstern (1944). Despite the fact that game theory has been rendered mathematically and logically systematic only since 1944, game-theoretic insights can be found among commentators going back to ancient times.

Normal-form game. In static games of complete, perfect information, a normal-form representation of a game is a specification of players' strategy spaces and payoff functions.

A strategy space for a player is the set of all strategies available to that player, whereas a strategy is a complete plan of action for every stage of the game, regardless of whether that stage actually arises in play. A payoff function for a player is a mapping from the cross-product of players' strategy spaces to that player's set of payoffs (normally the set of real numbers, where the number represents a cardinal or ordinal utility, often cardinal in the normal-form representation). That is, the payoff function of a player takes as its input a strategy profile (a specification of strategies for every player) and yields a representation of payoff as its output.

Fictitious play.

In game theory, fictitious play is a learning rule first introduced by G.W. Brown (1951). In it, each player presumes that the opponents are playing stationary (possibly mixed) strategies. At each round, each player thus best responds to the empirical frequency of play of their opponent. Such a method is adequate if the opponent indeed uses a stationary strategy, but it is flawed if the opponent's strategy is non-stationary; for example, the opponent's strategy may be conditioned on the fictitious player's last move. Brown first introduced fictitious play as an explanation for Nash equilibrium play. In fictitious play, strict Nash equilibria are absorbing states. The interesting question is therefore under what circumstances fictitious play converges, since it does not always converge.

Minimax. Minimax (sometimes MinMax or MM[1]) is a decision rule used in decision theory, game theory, statistics and philosophy for minimizing the possible loss for a worst case (maximum loss) scenario.
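
Fictitious play and the minimax rule can both be tried on a small two-player zero-sum game written in normal form, i.e. as a payoff matrix for the row player whose negative is the column player's payoff. The sketch below is a minimal illustration, not a reference implementation: it assumes matching pennies as the example game, assumes both players open with strategy 0 and break ties toward the lowest index, and the function names are hypothetical. It computes the pure-strategy maximin and minimax values and then runs simultaneous fictitious play, where each player best-responds to the opponent's empirical frequency of past play.

```python
# Hypothetical example game (matching pennies), in normal form:
# PAYOFF[i][j] is what the row player wins (and the column player loses)
# when row plays strategy i and column plays strategy j (0 = Heads, 1 = Tails).
PAYOFF = [
    [1, -1],
    [-1, 1],
]


def pure_maximin(payoff):
    """Row player's guaranteed payoff: the best worst case over pure strategies."""
    return max(min(row) for row in payoff)


def pure_minimax(payoff):
    """Column player's guaranteed cap on its loss: the smallest worst case over pure strategies."""
    n_cols = len(payoff[0])
    return min(max(row[j] for row in payoff) for j in range(n_cols))


def fictitious_play(payoff, rounds):
    """Return each player's empirical frequency of play after `rounds` rounds."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    row_counts = [0] * n_rows
    col_counts = [0] * n_cols
    # Round 1: both players open with strategy 0 (an arbitrary assumption).
    row_counts[0] += 1
    col_counts[0] += 1
    for _ in range(rounds - 1):
        # Expected payoff of each row strategy against column's past play
        # (raw counts give the same best response as normalized frequencies).
        row_values = [sum(payoff[i][j] * col_counts[j] for j in range(n_cols)) for i in range(n_rows)]
        # Row player's expected payoff for each column strategy against row's past play.
        col_values = [sum(payoff[i][j] * row_counts[i] for i in range(n_rows)) for j in range(n_cols)]
        # Simultaneous best responses: row maximizes, column minimizes row's payoff.
        # max/min return the first optimal index, so ties go to the lowest index.
        best_row = max(range(n_rows), key=lambda i: row_values[i])
        best_col = min(range(n_cols), key=lambda j: col_values[j])
        row_counts[best_row] += 1
        col_counts[best_col] += 1
    return [c / rounds for c in row_counts], [c / rounds for c in col_counts]


print(pure_maximin(PAYOFF), pure_minimax(PAYOFF))  # -1 1
row_freq, col_freq = fictitious_play(PAYOFF, 10_000)
print(row_freq, col_freq)
```

For matching pennies the pure maximin (-1) lies below the pure minimax (1), so no pure strategy secures the value of the game; the empirical frequencies produced by fictitious play drift toward the mixed strategy (1/2, 1/2), in line with Julia Robinson's 1951 result that fictitious play converges in two-player zero-sum games.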

Originally formulated for two-player zero-sum game theory, covering both the cases where players take alternate moves and those where they make simultaneous moves, it has also been extended to more complex games and to general decision making in the presence of uncertainty.

Extensive-form game. An extensive-form game is a specification of a game in game theory that allows (as the name suggests) explicit representation of a number of important aspects, such as the sequencing of players' possible moves, their choices at every decision point, the (possibly imperfect) information each player has about the other players' moves when making a decision, and each player's payoffs for all possible game outcomes.

Extensive-form games also allow representation of incomplete information in the form of chance events encoded as "moves by nature". Some authors, particularly in introductory textbooks, initially define the extensive-form game as being just a game tree with payoffs (no imperfect or incomplete information), and add the other elements in subsequent chapters as refinements. Whereas the rest of this article follows this gentle approach with motivating examples, we present upfront the finite extensive-form games as (ultimately) constructed here.
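
A finite game tree with perfect information, optionally including chance events ("moves by nature"), can be solved by backward induction, a standard technique that the text above does not itself describe. The sketch below assumes such a tree: there are no information sets, so the imperfect-information case is not handled. The classes `Leaf`, `Decision` and `Chance`, the function `backward_induction`, and the example payoffs are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union

# Hypothetical node types for a finite game tree (not from any library).


@dataclass
class Leaf:
    payoffs: Tuple[float, ...]  # one payoff per player at this terminal history


@dataclass
class Decision:
    name: str  # label for this decision point, used to report the chosen action
    player: int  # index of the player who moves here
    children: Dict[str, "Node"]  # action label -> subtree


@dataclass
class Chance:
    outcomes: List[Tuple[float, "Node"]]  # move by nature: (probability, subtree) pairs


Node = Union[Leaf, Decision, Chance]


def backward_induction(node: Node, plan: Dict[str, str]) -> Tuple[float, ...]:
    """Return the payoff vector reached from `node`; record each mover's choice in `plan`."""
    if isinstance(node, Leaf):
        return node.payoffs
    if isinstance(node, Chance):
        # Nature is not strategic: average the subtrees' payoffs by probability.
        values = [backward_induction(child, plan) for _, child in node.outcomes]
        n_players = len(values[0])
        return tuple(
            sum(prob * value[k] for (prob, _), value in zip(node.outcomes, values))
            for k in range(n_players)
        )
    # Decision node: the mover picks the action maximizing their own payoff
    # (the first listed action wins ties).
    best_action, best_value = None, None
    for action, child in node.children.items():
        value = backward_induction(child, plan)
        if best_value is None or value[node.player] > best_value[node.player]:
            best_action, best_value = action, value
    plan[node.name] = best_action
    return best_value


# Illustrative tree: player 0 stays Out or goes In; after In, player 1 chooses
# Fight or Accommodate, and Accommodate leads to a 50/50 move by nature.
game = Decision("P0", 0, {
    "Out": Leaf((1.0, 3.0)),
    "In": Decision("P1", 1, {
        "Fight": Leaf((0.0, 0.0)),
        "Accommodate": Chance([(0.5, Leaf((2.0, 2.0))), (0.5, Leaf((4.0, 1.0)))]),
    }),
})

plan: Dict[str, str] = {}
print(backward_induction(game, plan), plan)
# (3.0, 1.5) {'P1': 'Accommodate', 'P0': 'In'}
```

Solving the deepest decisions first is what makes the result a plan for every decision point, including ones that are not reached in play, which matches the idea of a strategy as a complete plan of action.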

Markov decision process. Markov decision processes (MDPs), named after Andrey Markov, provide a mathematical framework for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker.
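
One way to make the framework concrete is value iteration, a dynamic-programming method for solving finite MDPs. This is a minimal sketch under stated assumptions: finite state and action sets, a reward attached to each transition, and a discount factor `gamma` below 1 (rewards and discounting are not defined in the text here). The dictionary layout and the names `q_value` and `value_iteration` are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: transitions[state][action] = list of
# (probability, next_state, reward) triples. A state with no actions is terminal.
Transitions = Dict[str, Dict[str, List[Tuple[float, str, float]]]]


def q_value(transitions: Transitions, values: Dict[str, float],
            state: str, action: str, gamma: float) -> float:
    """Expected immediate reward plus discounted value of the successor state."""
    return sum(p * (r + gamma * values[nxt]) for p, nxt, r in transitions[state][action])


def value_iteration(transitions: Transitions, gamma: float = 0.9, tol: float = 1e-10):
    """Apply Bellman optimality updates until no value changes by `tol` or more."""
    values = {s: 0.0 for s in transitions}
    while True:
        # Best action value in each state; terminal states keep value 0.
        new_values = {
            s: max((q_value(transitions, values, s, a, gamma) for a in actions), default=0.0)
            for s, actions in transitions.items()
        }
        delta = max(abs(new_values[s] - values[s]) for s in transitions)
        values = new_values
        if delta < tol:
            break
    # Greedy policy with respect to the converged values.
    policy = {
        s: max(actions, key=lambda a: q_value(transitions, values, s, a, gamma))
        for s, actions in transitions.items()
        if actions
    }
    return values, policy


# Illustrative MDP: from A, "safe" pays 1 and stays in A; "risky" pays nothing
# but reaches B half the time, and B pays 2 forever.
mdp = {
    "A": {
        "safe": [(1.0, "A", 1.0)],
        "risky": [(0.5, "B", 0.0), (0.5, "A", 0.0)],
    },
    "B": {"stay": [(1.0, "B", 2.0)]},
}
values, policy = value_iteration(mdp)
print(policy)  # {'A': 'risky', 'B': 'stay'}
```

With `gamma = 0.9` the values settle at V(B) = 20 and V(A) = 9 / 0.55 ≈ 16.36, so the risky action is preferred even though it pays nothing immediately.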

MDPs are useful for studying a wide range of optimization problems solved via dynamic programming and reinforcement learning. MDPs were known at least as early as the 1950s (cf. Bellman 1957). A core body of research on Markov decision processes resulted from Ronald A. Howard's book published in 1960, Dynamic Programming and Markov Processes. More precisely, a Markov decision process is a discrete-time stochastic control process. At each time step, the process is in some state $s$, and the decision maker may choose any action $a$ that is available in state $s$.

Nash equilibrium. In game theory, the Nash equilibrium is a solution concept of a non-cooperative game involving two or more players, in which each player is assumed to know the equilibrium strategies of the other players, and no player has anything to gain by changing only their own strategy.[1] If each player has chosen a strategy and no player can benefit by changing strategies while the other players keep theirs unchanged, then the current set of strategy choices and the corresponding payoffs constitutes a Nash equilibrium.
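
The definition translates directly into a check over strategy profiles: a profile is an equilibrium when no single player can raise their own payoff by switching strategy while everyone else stays put. The sketch below assumes finite strategy spaces and searches pure strategies only, so mixed-strategy equilibria are not found; the function name `pure_nash_equilibria` is hypothetical, and the prisoner's-dilemma payoffs for two players, Amy and Will, are illustrative numbers rather than data from the source.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple

Profile = Tuple[str, ...]


def pure_nash_equilibria(
    strategy_spaces: Sequence[Sequence[str]],
    payoff: Callable[[Profile], Tuple[float, ...]],
) -> List[Profile]:
    """Return every pure strategy profile in which no player gains by deviating alone."""
    equilibria = []
    for profile in product(*strategy_spaces):
        current = payoff(profile)
        # Try every unilateral deviation: player i switches to `alt`, others unchanged.
        is_stable = all(
            payoff(profile[:i] + (alt,) + profile[i + 1:])[i] <= current[i]
            for i, space in enumerate(strategy_spaces)
            for alt in space
        )
        if is_stable:
            equilibria.append(profile)
    return equilibria


# Illustrative prisoner's-dilemma payoffs: (Amy's payoff, Will's payoff).
TABLE = {
    ("Cooperate", "Cooperate"): (3, 3),
    ("Cooperate", "Defect"): (0, 5),
    ("Defect", "Cooperate"): (5, 0),
    ("Defect", "Defect"): (1, 1),
}
SPACES = [("Cooperate", "Defect"), ("Cooperate", "Defect")]

print(pure_nash_equilibria(SPACES, TABLE.__getitem__))
# [('Defect', 'Defect')]
```

Passing the payoff as a function of the whole strategy profile mirrors the normal-form definition of a payoff function. Running the same check on matching pennies returns an empty list, since that game has only a mixed-strategy equilibrium.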

The reality of the Nash equilibrium of a game can be tested using experimental economics methods. Stated simply, Amy and Will are in Nash equilibrium if Amy is making the best decision she can, taking into account Will's decision while Will's decision remains unchanged, and Will is making the best decision he can, taking into account Amy's decision while Amy's decision remains unchanged. The Nash equilibrium was named after John Forbes Nash, Jr.

Bayesian game. In game theory, a Bayesian game is one in which information about characteristics of the other players (i.e. payoffs) is incomplete. Following John C.

Shapley Value.
