Butterworth / Bessel / Chebyshev Filters. Davidcairns/MediaPlayerDemo. Fire. I wasn't really sure whether to put this one under Graphics or Models.

In the end, I chose Models because I felt like it. Fire is an extremely hard thing to simulate accurately on a computer because it is a huge monster 3D fluid dynamic system. To produce an accurate simulation would require this much (arms stretched wide) computing power, and would certainly not be possible in real time. Moreover, I haven't the foggiest idea how to do it. It is for these reasons that I am not going to describe a super accurate model. Most of you will have seen the 2D fire effect that was shown off in many demos, and is still lingering around. You can download the fire I wrote on the left. Please note that, being a PC programmer, I tend to make this kind of thing in VGA 320x200 screen mode with 256 colours. The idea behind the original fire effect was simple.

From iPod Library to PCM Samples in Far Fewer Steps Than Were Previously Necessary. In a July blog entry, I showed a gruesome technique for getting raw PCM samples of audio from your iPod library, by means of an easily-overlooked metadata attribute in the Media Library framework, along with the export functionality of AV Foundation.

The AV Foundation stuff was the gruesome part: with no direct means for sample-level access to the song “asset”, it required an intermediate export to .m4a, which was a lossy re-encode if the source was of a different format (like MP3), and then a subsequent conversion to PCM with Core Audio. Please feel free to forget all about that approach… except for the Core Media timescale stuff, which you’ll surely see again before too long. iOS 4.1 added a number of new classes to AV Foundation (indeed, these were among the most significant 4.1 API diffs) to provide an API for sample-level access to media.

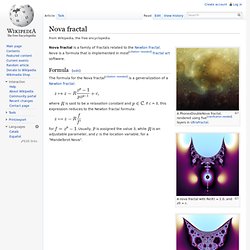

The essential classes are AVAssetReader and AVAssetWriter. Using these, we can dramatically simplify and improve the iPod converter.

iOS - Post-processing on audio from the iPhone user's music library? iOS - What's the easiest way to resize/optimize an image size with the iPhone SDK? iPhone Tutorials. Language agnostic - 2D smoke/fire/mist algorithm. Nova fractal. A nova fractal with Re(R) = 1.0, and z0 = c.
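The nova captions here are parameterized by a relaxation R and a start value z0 = c. As a rough sketch, assuming the commonly cited nova iteration z ← z − R·(z^p − 1)/(p·z^(p−1)) + c (a relaxed Newton step for z^p − 1 with a constant c added), an iteration count for one point might be computed as follows. The function names, the tolerance, the iteration cap, and the sampled region are illustrative choices, not taken from the source.

```python
def nova_step(z, c, relaxation=1.0, exponent=3):
    """One nova iteration: a relaxed Newton step for z**exponent - 1, plus c."""
    f = z ** exponent - 1
    df = exponent * z ** (exponent - 1)
    return z - relaxation * f / df + c


def nova_iterations(c, z0=None, relaxation=1.0, exponent=3,
                    max_iter=50, tol=1e-6):
    """Count iterations until successive values differ by less than tol.

    z0 defaults to c, matching the "z0 = c" captions. Returns max_iter if
    the orbit does not settle or the step is undefined (zero derivative).
    """
    z = c if z0 is None else z0
    for n in range(max_iter):
        try:
            z_next = nova_step(z, c, relaxation, exponent)
        except (ZeroDivisionError, OverflowError):
            return max_iter
        if abs(z_next - z) < tol:
            return n
        z = z_next
    return max_iter


def nova_grid(width=40, height=20, relaxation=1.0):
    """Iteration counts over an assumed region: real in [-1.5, 1.5], imag in [-1, 1]."""
    rows = []
    for j in range(height):
        y = -1.0 + 2.0 * j / (height - 1)
        row = []
        for i in range(width):
            x = -1.5 + 3.0 * i / (width - 1)
            row.append(nova_iterations(complex(x, y), relaxation=relaxation))
        rows.append(row)
    return rows
```

Mapping each count in `nova_grid()` to a colour gives a picture of the fractal; changing `relaxation` to 2.0 or 3.0 corresponds to the other captions.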

A nova fractal with Re(R) = 2.0, and z0 = c. A nova fractal with Re(R) = 3.0, and z0 = c. A 129804.49 times magnification at the point (-0.43608549343268, -0.102470623996602) on the novaMandelbrot fractal with start value , exponent and relaxation. OpenGL ES 2.0 for iPhone Tutorial.

Learn how to use OpenGL ES 2.0 from the ground up! OpenGL ES is the lowest-level API that you use to program 2D and 3D graphics on the iPhone. If you’ve used other frameworks such as Cocos2D, Sparrow, Corona, or Unity, these are all built on top of OpenGL! OpenGL ES 2.0 for iPhone Tutorial Part 2: Textures.

Learn how to add textures using OpenGL ES 2.0! In this tutorial series, our aim is to take the mystery and difficulty out of OpenGL ES 2.0, by giving you hands-on experience using it from the ground up! In the first part of the series, we covered the basics of initializing OpenGL, creating some simple vertex and fragment shaders, and presenting a simple rotating cube to the screen. In this part of the tutorial series, we’re going to take things to the next level by adding some textures to our cube! Caveat: I am not an OpenGL expert!

Pulse-code modulation. Linear pulse-code modulation (LPCM) is a specific type of PCM where the quantization levels are linearly uniform.[5] This is in contrast to PCM using, for instance, the A-law or μ-law algorithm, where quantization levels vary as a function of amplitude.
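To make the contrast concrete, here is a small sketch comparing uniform (linear) quantization with μ-law companding. It assumes the standard continuous μ-law curve F(x) = sgn(x)·ln(1 + μ|x|)/ln(1 + μ) with μ = 255 and samples normalized to [-1, 1]. The function names and the 8-bit setting are illustrative, and this is not a bit-exact telephony codec.

```python
import math

MU = 255  # assumed companding parameter


def mu_compress(x, mu=MU):
    """Map x in [-1, 1] through the mu-law curve (result is also in [-1, 1])."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)


def mu_expand(y, mu=MU):
    """Inverse of mu_compress."""
    return math.copysign(((1 + mu) ** abs(y) - 1) / mu, y)


def quantize_linear(x, bits=8):
    """Round x in [-1, 1] to the nearest of a set of uniformly spaced levels."""
    scale = 2 ** (bits - 1) - 1
    return round(x * scale) / scale


def quantize_mu_law(x, bits=8, mu=MU):
    """Compress, quantize uniformly in the compressed domain, then expand."""
    return mu_expand(quantize_linear(mu_compress(x, mu), bits), mu)


if __name__ == "__main__":
    # Print the absolute quantization error of each scheme at several amplitudes.
    for x in (0.001, 0.01, 0.1, 0.9):
        err_lin = abs(quantize_linear(x) - x)
        err_mu = abs(quantize_mu_law(x) - x)
        print(f"x={x}: linear error={err_lin:.6f}, mu-law error={err_mu:.6f}")
```

Because the μ-law levels are packed more densely near zero, quiet samples are reproduced more accurately than with uniform levels at the same bit depth, at the cost of coarser steps near full scale.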

Though PCM is a more general term, it is often used to describe data encoded as LPCM. PCM streams have two basic properties that determine their fidelity to the original analog signal: the sampling rate, which is the number of times per second that samples are taken; and the bit depth, which determines the number of possible digital values that each sample can take. History. In 1920, the Bartlane cable picture transmission system, named for its inventors Harry G. Bartholomew and Maynard D. In 1926, Paul M. The first transmission of speech by digital techniques was the SIGSALY encryption equipment used for high-level Allied communications during World War II.
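The two PCM properties named above, sampling rate and bit depth, can be illustrated with a short sketch. It assumes signed integer LPCM samples and a full-scale sine wave as the input signal; the helper names are hypothetical.

```python
import math


def pcm_levels(bit_depth):
    """Number of distinct values a sample of the given bit depth can take."""
    return 2 ** bit_depth


def pcm_bit_rate(sample_rate_hz, bit_depth, channels=1):
    """Raw (uncompressed) data rate in bits per second."""
    return sample_rate_hz * bit_depth * channels


def sample_sine(freq_hz, sample_rate_hz, bit_depth, n_samples):
    """Sample a full-scale sine wave and quantize it to signed integer codes."""
    max_code = 2 ** (bit_depth - 1) - 1  # largest positive code
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz  # time of the n-th sample in seconds
        samples.append(round(max_code * math.sin(2 * math.pi * freq_hz * t)))
    return samples


if __name__ == "__main__":
    print(pcm_levels(16))
    print(pcm_bit_rate(44100, 16, channels=2))
    print(sample_sine(1000, 8000, 16, 8))
```

For example, 16-bit stereo audio at 44.1 kHz (the CD audio format) works out to 44100 × 16 × 2 = 1,411,200 bits per second, with 65,536 possible values per sample.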

Theory - How to program a fractal?