Ubuntu + Kinect + OpenNI + PrimeSense - MitchTech. Getting the OpenNI and PrimeSense drivers working on Ubuntu Here’s an overview of the process to get the OpenNI and PrimeSense drivers working with the Kinect and Ubuntu.

Begin by installing some dependencies: sudo apt-get install git-core cmake freeglut3-dev pkg-config build-essential libxmu-dev libxi-dev libusb-1.0-0-dev doxygen graphviz mono-complete Make a directory to store the build, then clone the OpenNI source from Github. mkdir ~/kinectcd ~/kinectgit clone Run the RedistMaker script in the Platform/Linux folder and install the output binaries. Rbg's home page. I finished my Ph.D. in computer vision at The University of Chicago under the supervision of Pedro Felzenszwalb in April 2012.

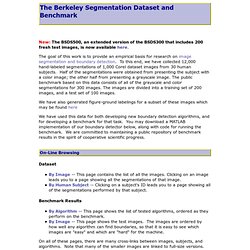

Then, I spent two unbelievably wonderful years as a postdoc at UC Berkeley under Jitendra Malik. Now, I'm a Researcher at Microsoft Research in Redmond, WA. My main research interests are in computer vision, AI, and machine learning. I'm particularly focused on building models for object detection and recognition. These models aim to incorporate the "right" biases so that machine learning algorithms can understand image content from moderate to large-scale datasets. During my Ph.D., I spent time as a research intern at Microsoft Research Cambridge, UK working on human pose estimation from (Kinect) depth images. From 3D Scene Geometry to Human Workspace. Www.umiacs.umd.edu/~mingyliu/papers/liu_cvpr2010.pdf. The Berkeley Segmentation Dataset and Benchmark. New: The BSDS500, an extended version of the BSDS300 that includes 200 fresh test images, is now available here.

The goal of this work is to provide an empirical basis for research on image segmentation and boundary detection. To this end, we have collected 12,000 hand-labeled segmentations of 1,000 Corel dataset images from 30 human subjects. Half of the segmentations were obtained from presenting the subject with a color image; the other half from presenting a grayscale image.

The public benchmark based on this data consists of all of the grayscale and color segmentations for 300 images. The images are divided into a training set of 200 images, and a test set of 100 images. We have also generated figure-ground labelings for a subset of these images which may be found here We have used this data for both developing new boundary detection algorithms, and for developing a benchmark for that task. Dataset. Free your Camera: 3D Indoor Scene Understanding from Arbitrary Camera Motion. 24th British Machine Vision Conference (BMVC) Axel Furlan, Stephen Miller, Domenico G.

Sorrenti, Fei-Fei Li, Silvio Savarese Abstract Many works have been presented for indoor scene understanding, yet few of them combine structural reasoning with full motion estimation in a real-time oriented approach. David Fouhey. Data-Driven 3D Primitives. The Important Stuff Downloads: Code Natural World Data (906MB .zip) (From Hays and Efros, CVPR 2008) Contact: David Fouhey (please note that we cannot provide support) This is a reference version of the training code.

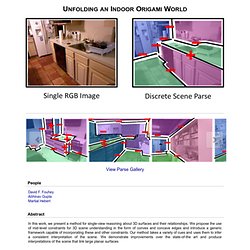

It also contains a dataset setup script that will download the NYU v2 dataset and prepare it correctly. Setup Download the code and the data and unpack each. Acknowledgments The data used by default is the NYU Depth v2 Dataset available here and collected by Nathan Silberman, Pushmeet Kohli, Derek Hoiem and Rob Fergus. This code builds heavily on: Unfolding an Indoor Origami World. Carousel slider View Parse Gallery People Abstract In this work, we present a method for single-view reasoning about 3D surfaces and their relationships.

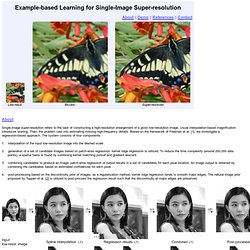

We propose the use of mid-level constraints for 3D scene understanding in the form of convex and concave edges and introduce a generic framework capable of incorporating these and other constraints. Silvio Savarese - Home page. Www.di.ens.fr/willow/research/seeing3Dchairs/data/cvpr14_Seeing3DChairs.pdf. Seeing 3D chairs: exemplar part-based 2D-3D alignment using a large dataset of CAD models. Free your Camera: 3D Indoor Scene Understanding from Arbitrary Camera Motion. Learning image super-resolution. About.

Subhransu's Homepage. Poselets. Abstract We address the classic problems of detection and segmentation using a part based detector that operates on a novel part, which we refer to as a poselet.

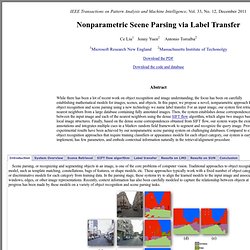

Poselets are tightly clustered in both appearance space (and thus are easy to detect) as well as in configuration space (and thus are helpful for localization and segmentation). We demonstrate poselets are effective for detection, pose extraction, segmentation, action/pose estimation and attribute classification. Theaffordances.net. Nonparametric Scene Parsing via Label Transfer. IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 33, No. 12, December 2011 Scene parsing, or recognizing and segmenting objects in an image, is one of the core problems of computer vision.

Traditional approaches to object recognition begin by specifying an object model, such as template matching, constellations, bags of features, or shape models, etc. These approaches typically work with a fixed number of object categories and require training generative or discriminative models for each category from training data. Www.csc.kth.se/cvap/cvg/rg/materials/magnus_003_slides.pdf.

If you are starting your research in the field of object recognition / object detection... If you are an aspiring computer vision graduate student and hope to one day shatter the boundaries of machine perception, a good place to start is on the shoulders of giants.

A key ingredient to successful object recognition research is a powerful codebase, which you will hopefully one day outgrow and/or extend. The single best place to get starter-code is at the following work, titled: Why not start with some easy-to-understand MATLAB code so you can starting advancing your research this year, not this decade!?! Also, if you are able to build on this work, you will have an easy time publishing object detection papers that will actually be treated seriously by contemporary vision researchers. So my advice is to get voc-release-3.1, and read the following PAMI paper. Home Page of Iasonas Kokkinos. Combining Low- and High- level vision I am working on learning, segmenting and parsing with deformable object models.

The main priority of my research is to develop models that allow to combine low-level processing (e.g. boundary detection, segmentation) with high-level information, e.g. model-based guidance. Object Parsing We address the problem of detecting an object and its components by recursively composing them from image observations. We develop an efficient inference method that addresses the computational complexity of the problem by relying on A*. We use our hierarchical object model to efficiently compute a coarse solution which then guides search at the fine level.

Computer Vision Lab. CVPR 2014 Webpage - Accepted Papers. CVPR 2014 papers on the web - Papers. Accepted Orals Reconstructing Storyline Graphs for Image Recommendation from Web Community Photos (project, PDF) Gunhee Kim* (Disney Research), Eric Xing (Carnegie Mellon University) Www.cs.toronto.edu/~ucacsjp/

A Blog From a Human-engineer-being — Eren Golge's Blog A Blog From a Human-engineer-being. Computer Science 294: Practical Machine Learning. FAQ: What machine learning book should I start with? I run across this question a lot and I am sometimes asked it directly. It came up again on the discussion board for Stanford's new online introductory Machine Learning course. I gave the following answer and it was well received so I thought I'd post it here as well (since that forum is not publicly available).

The answer, as in all things, is that "it depends". What makes a good starting book is dependent on your background (specifically in math) and what your end goal is. Computer Science 294: Practical Machine Learning. Wise set of machine learning resources abou A Blog From a Human-engineer-being. (358) Machine Learning: What are some good resources for learning about machine learning? Energy Minimization with Graph Cuts. Graph Cuts can be used to conveniently minimize energies often used in computer vision. This page is a quick summary of Boykov, Veksler, and Zabih paper "Fast Approximate Energy Minimization via Graph Cuts".

Energies that can be minimized are described, then two minimization algorithms are summarized, alpha-expansion and alpha-beta swap, and finally two practical examples are shown. Graph Cuts finds the optimal solution to a binary problem. However when each pixel can be assigned many labels, finding the solution can be computationally expensive.

For the following type of energy, a serie of graph cuts can be used to find a convenient local minimum: The first term is known as the data term. Is coherent with the observed data. Cms.brookes.ac.uk/staff/PhilipTorr/Papers/2007/PAMI_submission.pdf. Philip Torr's Home Page. Cvc-komodakis.centrale-ponts.fr. Software. An implementation of the "MP-BCFW" algorithm described in. Courses.engr.illinois.edu/cs543/sp2011/lectures/Lecture 12 - MRFs and Graph Cut Segmentation - Vision_Spring2011.pdf. Courses.engr.illinois.edu/cs543/sp2011/lectures/Lecture 12 - MRFs and Graph Cut Segmentation - Vision_Spring2011.pdf. Discriminatively Trained Deformable Part Models (Release 5)

People.csail.mit.edu/myungjin/publications/treeContext.pdf. Www.bmva.org/bmvc/2012/BMVC/paper081/paper081.pdf. ▶ Understanding Visual Scenes. One remarkable aspect of human vision is the ability to understand the meaning of a novel image or event quickly and effortlessly. Within a single glance, we can comprehend the semantic category of a place, its spatial layout as well as identify many of the objects that compose the scene. Approaching human abilities at scene understanding is a current challenge for computer vision systems. The field of scene understanding is multidisciplinary, involving a growing collection of works in computer vision, machine learning, cognitive psychology, and neuroscience.

In this tutorial, we will focus on recent work on computer vision attempting to solve the tasks of scene recognition and classification, visual context representation, object recognition in context, drawing parallelism with work in psychology and neuroscience. Devising systems that solve scene and object recognition in an integrated fashion will lead towards more efficient and robust artificial visual understanding systems. Recognizing and Learning Object Categories. Simple object detector with boosting. Examples. Saliency Benchmark. Personal Robotics: Scene Understanding from RGB-D data. Semantic Scene Labeling for Personal Robots. Chad Jenkins » Blog Archive » rosbridge: Beyond a Single Robot Cloud Engine. Saliency Benchmark. Antonio Torralba. Spatial envelope.

Abstract: In this paper, we propose a computational model of the recognition of real world scenes that bypasses the segmentation and the processing of individual objects or regions. The procedure is based on a very low dimensional representation of the scene, that we term the Spatial Envelope. We propose a set of perceptual dimensions (naturalness, openness, roughness, expansion, ruggedness) that represent the dominant spatial structure of a scene. Simple object detector with boosting. Publications « Nathan Silberman. Sanja Fidler. People.csail.mit.edu/lim/paper/SketchTokens_cvpr13.pdf. Home. Ttic.uchicago.edu/~fidler/papers/fidler_et_al_nips12.pdf.

Publications « Nathan Silberman. Ttic.uchicago.edu/~fidler/papers/cvpr12seg.pdf. Publications in reverse chronological order. Personal Robotics: Projects. Saliency Benchmark. Contextual guidance of eye movements. Simple object detector with boosting. Spatial envelope. Antonio Torralba. Spatial envelope. Ttic.uchicago.edu/~fidler/papers/cvpr12seg.pdf. Sanja Fidler. Ttic.uchicago.edu/~fidler/papers/cvpr12seg.pdf.

Ttic.uchicago.edu/~fidler/papers/fidler_et_al_nips12.pdf. Ttic.uchicago.edu/~fidler/papers/Schwing_etal2013.pdf. Saliency Benchmark. Deep Learning for Saliency. Visual attention is the ability to select visual stimuli that are most behaviorally relevant among the many others. It allows us to allocate our limited processing resources to the most informative part of the visual scene. In this work, we learn general high-level concepts with the aid of selective attention in a multi-layer deep network. Greedy layer-wise training is applied to learn mid- and high- level features from salient regions of images.

The network is demonstrated to be able to successfully learn meaningful high-level concepts such as faces and texts in the third-layer and mid-level features like junctions, textures, and parallelism in the second-layer. Unlike object detectors that are recently included in saliency models to predict semantic saliency, the higher-level features we learned are general base features that are not restricted to one or few object categories. Paper: Code: Model with Training Data Results on MIT Fixation Dataset Results on FIFA Dataset. Deep Learning for Saliency. A Benchmark of Computational Models of Saliency to Predict Human Fixations. Saliency Benchmark. Personal Robotics: Projects. Personal Robotics: Scene Understanding from RGB-D data.

Personal Robotics: Human Activity Anticipation. | Detection Project Overview | Anticipation Project Overview | Data/Code | Results | An important aspect of human perception is anticipation, which we use extensively in our day-to-day activities when interacting with other humans as well as with our surroundings. Anticipating which activities will a human do next (and how to do them) can enable an assistive robot to plan ahead for reactive responses in the human environments.

Furthermore, anticipation can even improve the detection accuracy of past activities. In this work, we represent each possible future using an anticipatory temporal conditional random field (ATCRF) that models the rich spatial-temporal relations through object affordances. We then consider each ATCRF as a particle and represent the distribution over the potential futures using a set of particles. Contextually Guided Semantic Labeling and Search by Personal Robots. Efficient Multi-View Object Recognition and Pose Estimation. MOPED Object recognition and pose estimation in HD Video. Johns Hopkins Computer Vision Machine Learning. Simultaneous Estimation of an Object's Class, Pose, and 3D Reconstruction from a Single Image Main Ideas. Detecting Potential Falling Objects by Inferring Human Action and Natural Disturbance - Yibiao Zhao. Redirecting. Detecting Potential Falling Objects by Inferring Human Action and Natural Disturbance - Yibiao Zhao. Detecting Potential Falling Objects by Inferring Human Action and Natural Disturbance - Yibiao Zhao.

Brown University Robotics. Brown University Robotics.