EURECOM Kinect Face Dataset. RGB-D Face database. Database description: The very first step in many facial analysis systems is face detection.

Though face detection has been studied for many years, there is still no widely accepted public benchmark database in which both color and depth information are captured by the same sensor.

Repository of robotics and computer vision datasets. The Affect Analysis Group at Pittsburgh. Cohn-Kanade AU-Coded Expression Database. The Cohn-Kanade AU-Coded Facial Expression Database is for research in automatic facial image analysis and synthesis and for perceptual studies.

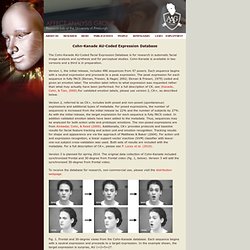

Cohn-Kanade is available in two versions, and a third is in preparation. Version 1, the initial release, includes 486 sequences from 97 posers. Each sequence begins with a neutral expression and proceeds to a peak expression. The peak expression for each sequence is fully FACS coded (Ekman, Friesen, & Hager, 2002; Ekman & Friesen, 1979) and given an emotion label. Version 2, referred to as CK+, includes both posed and non-posed (spontaneous) expressions and additional types of metadata. Version 3 is planned for spring 2014. To receive the database for research, non-commercial use, please visit the distribution webpage. Fig. 1.

FaceWarehouse. Introduction: FaceWarehouse is a database of 3D facial expressions for visual computing applications.

Using a Kinect RGBD camera, we captured 150 individuals aged 7-80 from various ethnic backgrounds. For each person, we captured RGBD data of their different expressions, including the neutral expression and 19 other expressions such as mouth-opening, smile, and kiss. For every raw RGBD data record, a set of facial feature points on the color image, such as the eye corners, the mouth contour and the nose tip, is automatically localized and manually adjusted if better accuracy is required.

3D Mask Attack Dataset - DDP. The 3D Mask Attack Database (3DMAD) is a biometric (face) spoofing database.

It currently contains 76500 frames of 17 persons, recorded using Kinect for both real-access and spoofing attacks. Each frame consists of:

- a depth image (640x480 pixels – 1x11 bits)
- the corresponding RGB image (640x480 pixels – 3x8 bits)
- manually annotated eye positions (with respect to the RGB image)

The data is collected in 3 different sessions for all subjects, and for each session 5 videos of 300 frames are captured.
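As a quick consistency check on the figures above, the sketch below multiplies out the session layout (17 subjects, 3 sessions, 5 videos, 300 frames) and computes the raw, bit-packed size of one depth frame and one RGB frame. The constant and function names are illustrative only. The byte counts assume tightly packed bits; the files actually distributed with 3DMAD may store depth in a wider container (for example 16 bits per pixel), so treat them as a lower bound.

```python
# Consistency check of the 3DMAD numbers quoted in the description.
# All names here are illustrative, not part of any dataset tooling.
SUBJECTS = 17
SESSIONS = 3
VIDEOS_PER_SESSION = 5
FRAMES_PER_VIDEO = 300

WIDTH, HEIGHT = 640, 480
DEPTH_BITS_PER_PIXEL = 1 * 11  # one channel, 11 bits
RGB_BITS_PER_PIXEL = 3 * 8     # three channels, 8 bits each


def total_frames() -> int:
    """Frames across all subjects, sessions and videos."""
    return SUBJECTS * SESSIONS * VIDEOS_PER_SESSION * FRAMES_PER_VIDEO


def raw_bits_per_frame() -> tuple[int, int]:
    """(depth_bits, rgb_bits) for one frame, assuming tightly packed pixels."""
    pixels = WIDTH * HEIGHT
    return pixels * DEPTH_BITS_PER_PIXEL, pixels * RGB_BITS_PER_PIXEL


if __name__ == "__main__":
    depth_bits, rgb_bits = raw_bits_per_frame()
    print("total frames:", total_frames())
    print("packed bytes per frame (depth, rgb):", depth_bits // 8, rgb_bits // 8)
```

The product 17 × 3 × 5 × 300 reproduces the 76500 frames stated above, which confirms that the session description and the total count agree.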

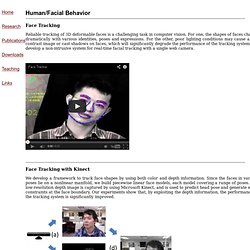

The recordings are done under controlled conditions, with a frontal view and neutral expression. Sample frames from three different sessions for a subject. In each video, the eye positions are manually labelled for the 1st, 61st, 121st, 181st, 241st and 300th frames and linearly interpolated for the rest.

Junzhou Huang. Face Tracking: Reliable tracking of 3D deformable faces is a challenging task in computer vision.
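The keyframe labelling scheme described above can be sketched as plain piecewise-linear interpolation between annotated frames. This is a minimal illustration, not the 3DMAD authors' code: the function name `interpolate_eye`, the dictionary layout and the sample coordinates are hypothetical, and each eye would be interpolated separately with the same routine.

```python
from bisect import bisect_right

# 1-based frame indices that carry manual eye annotations, per the description.
KEYFRAMES = [1, 61, 121, 181, 241, 300]

Point = tuple[float, float]


def interpolate_eye(labels: dict[int, Point], frame: int) -> Point:
    """Linearly interpolate one eye's (x, y) position for `frame`.

    `labels` maps annotated frame indices to (x, y) positions (hypothetical
    layout). Frames outside the annotated range are clamped to the nearest
    annotated frame.
    """
    keys = sorted(labels)
    if frame <= keys[0]:
        return labels[keys[0]]
    if frame >= keys[-1]:
        return labels[keys[-1]]
    # Find the annotated frames f0 <= frame < f1 that bracket `frame`.
    i = bisect_right(keys, frame)
    f0, f1 = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)
    (x0, y0), (x1, y1) = labels[f0], labels[f1]
    return (x0 + t * (x1 - x0), y0 + t * (y1 - y0))


if __name__ == "__main__":
    # Made-up coordinates for the first three keyframes.
    left_eye = {1: (100.0, 200.0), 61: (130.0, 200.0), 121: (130.0, 260.0)}
    print(interpolate_eye(left_eye, 31))  # halfway between frames 1 and 61
```

Frame 31 lies halfway between keyframes 1 and 61, so its interpolated position is the midpoint (115.0, 200.0) of the two labelled positions.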

First, the shapes of faces change dramatically with identity, pose and expression. Second, poor lighting conditions may produce low-contrast images or cast shadows on faces, which significantly degrades the performance of the tracking system.

IMPCA - Datasets. ETHZ - Computer Vision Lab: Datasets. Find below a selection of datasets maintained by us.

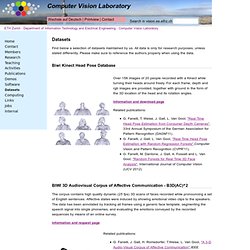

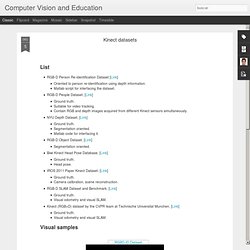

All data is only for research purposes, unless stated otherwise. Please make sure to reference the authors properly when using the data. Biwi Kinect Head Pose Database. BIWI 3D Audiovisual Corpus of Affective Communication - B3D(AC)^2: The corpus contains high-quality dynamic (25 fps) 3D scans of faces recorded while pronouncing a set of English sentences. BIWI Walking Pedestrians dataset: Walking pedestrians in busy scenarios from a bird's-eye view. ETHZ Shape Classes.

Computer Vision and Education: Kinect datasets. This astonishing seminar, called "Accidental Pinhole and Pinspeck Cameras: revealing the scene outside the picture", gives a refreshing view of the concept of shadows: a shadow is also a form of accidental image.

The shadow of an object is all the light that is missing because of the object's presence in the scene. If we were able to extract the light that is missing (that is, the difference between the scene with the object absent and the scene with the object present), we would get an image. That image would be the negative of the shadow, and it would be approximately equivalent to the image produced by a pinhole camera whose pinhole has the shape of the occluder.
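The "missing light" idea above amounts to a per-pixel difference between two frames of the same scene. The sketch below assumes two registered grayscale frames of equal size taken with identical exposure, one without and one with the occluder. It is a simplified illustration of the subtraction only, not the processing used in the seminar, and the function name `accidental_image` is hypothetical. Clamping negative values to zero is also an assumption: an occluder can only remove light, so negative differences are treated as noise.

```python
Image = list[list[float]]


def accidental_image(without_occluder: Image, with_occluder: Image) -> Image:
    """Per-pixel light removed by the occluder (the negative of its shadow).

    Both inputs are assumed to be registered grayscale frames of equal size.
    """
    if len(without_occluder) != len(with_occluder):
        raise ValueError("images must have the same shape")
    result: Image = []
    for row_a, row_b in zip(without_occluder, with_occluder):
        if len(row_a) != len(row_b):
            raise ValueError("images must have the same shape")
        # Assumption: negative differences are noise, so clamp them to zero.
        result.append([max(a - b, 0.0) for a, b in zip(row_a, row_b)])
    return result


if __name__ == "__main__":
    empty_scene = [[10.0, 10.0], [10.0, 10.0]]
    scene_with_object = [[10.0, 4.0], [10.0, 10.0]]
    print(accidental_image(empty_scene, scene_with_object))
```

Only the pixel darkened by the occluder survives the subtraction, which is exactly the "negative of the shadow" that forms the accidental image.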

Therefore, a shadow is not just a dark region around an object. I highly recommend watching the video seminar [Link], given by Antonio Torralba from MIT.

Face Recognition Homepage - Databases. When benchmarking an algorithm, it is advisable to use a standard test dataset so that researchers can directly compare results.

While there are many databases in use currently, the choice of an appropriate database should be based on the given task (aging, expressions, lighting, etc.). Another way is to choose a dataset specific to the property being tested (e.g. how an algorithm behaves when given images with lighting changes or images with different facial expressions). If, on the other hand, an algorithm needs to be trained with more images per class (like LDA), the Yale face database is probably more appropriate than FERET.

Testing protocols: Face Image ISO Compliance Verification Benchmark Area. FVC-onGoing is a web-based automated evaluation system developed to evaluate biometric algorithms.