The Tin Hat | Simple Online Privacy Guides and Tutorials

TestGrid – tahoe-lafs This page is about the "Public Test Grid", also called the "pubgrid". The pubgrid has several purposes: to make it easier for people new to Tahoe to begin experimenting; to enable small-scale trial use of Tahoe; and to help the Tahoe community gain experience with grids of heterogeneous servers without a pre-existing social organization. The pubgrid also has two critical non-goals: it is not intended to provide large-scale storage, and it is not intended to be reliable. How To Connect To The Public Test Grid: The test grid is subject to being updated at random times, so compatibility is likely to break without notice. The test grid is currently running a 1.0.0-compatible release (allmydata-tahoe: 1.9.2); see "My versions" on the welcome page for the current version of the web gateway server. Set up the code according to docs/quickstart.rst and docs/running.rst, then run bin/tahoe start.

MediaGoblin:: GNU MediaGoblin

File Sharing Software - Network & Internet | Linux Software directKonnect is a Neo Modus compatible file sharing client for KDE. directKonnect supports many of the features expected from a modern Direct Connect client, such as multiple hub connections, tiger tree hashes, and UTF8 encoded file lists. Platforms: *nix, Linux

JStylo-Anonymouth - PSAL The JStylo and Anonymouth integrated open-source project (JSAN) resides on GitHub. What is JSAN? JSAN is a writing style analysis and anonymization framework. It consists of two parts: JStylo, an authorship attribution framework, and Anonymouth, an authorship evasion (anonymization) framework. JStylo serves as the underlying feature extraction and authorship attribution engine for Anonymouth, which uses the stylometric features and classification results obtained through JStylo to suggest changes that users can make to anonymize their writing style. Details about JSAN: Use Fewer Instances of the Letter "i": Toward Writing Style Anonymization. JSAN tutorial: presented at 28c3 (video). If you use JStylo and/or Anonymouth in your research, please cite: Andrew McDonald, Sadia Afroz, Aylin Caliskan, Ariel Stolerman and Rachel Greenstadt. If you use the corpus in your research, please cite: Michael Brennan and Rachel Greenstadt.

P2P-like Tahoe filesystem offers secure storage in the cloud Tahoe is a secure distributed filesystem that is designed to conform with the principle of least authority. The developers behind the project announced this month the release of version 1.5, which includes bugfixes and improvements to portability and performance, including a 10 percent boost to file upload speed over high-latency connections. Tahoe's underlying architecture is similar to that of a peer-to-peer network. Tahoe was originally developed with funding from Allmydata, a company that provides Web backup services. The idea was that every user would be able to get the benefits of distributed off-site backups by sharing a portion of their local drive space with the rest of the network. When a file is deployed to Tahoe, it is encrypted and split into pieces that are spread out across ten separate nodes. Although Tahoe is a distributed filesystem, it is not entirely decentralized. Tahoe is being used in a number of different ways.
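The excerpt above says a file is split into pieces spread across ten nodes. As a rough illustration of how such a split can survive missing nodes, here is a toy k-of-n erasure code in Python. It is a sketch under stated assumptions, not Tahoe's implementation: the prime field, the names encode and decode, and the threshold k = 3 are chosen for illustration only (the excerpt mentions only the ten nodes), and the encryption step is left out.

```python
# Toy k-of-n erasure code. Hypothetical names; not Tahoe's actual code.
P = 257  # smallest prime above 255, so every byte value is a field element


def _interpolate(points, x):
    """Evaluate at x the unique polynomial through `points`, modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+).
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data, k, n):
    """Split `data` into n shares (lists of ints) so that any k rebuild it."""
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    shares = [[] for _ in range(n)]
    for start in range(0, len(padded), k):
        # The k data bytes define a polynomial through x = 1..k.
        points = [(x + 1, padded[start + x]) for x in range(k)]
        for i in range(n):
            # Share i holds that polynomial's value at x = i + 1.
            shares[i].append(_interpolate(points, i + 1))
    return shares


def decode(available, k, length):
    """Rebuild the data from a dict {share_index: symbols} with >= k entries."""
    chosen = sorted(available.items())[:k]
    if len(chosen) < k:
        raise ValueError("not enough shares to reconstruct the data")
    out = bytearray()
    for pos in range(len(chosen[0][1])):
        points = [(idx + 1, symbols[pos]) for idx, symbols in chosen]
        for x in range(1, k + 1):
            # Values at x = 1..k are the original data bytes.
            out.append(_interpolate(points, x))
    return bytes(out[:length])


if __name__ == "__main__":
    message = b"least authority"
    shares = encode(message, k=3, n=10)  # assumed threshold: any 3 of 10
    survivors = {1: shares[1], 6: shares[6], 9: shares[9]}
    print(decode(survivors, k=3, length=len(message)))
```

With these assumed parameters each share is about one third the size of the input, so the ten shares together take roughly 3.3 times the original space in exchange for tolerating the loss of any seven of them.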

Unhosted: separating web apps from data storage

Woof - Simple Web-based File Sharing | Linux Poison Transferring files from one computer to another on a network isn't always a straightforward task. Equipping networks with a file server, FTP server, or common web server is one way to simplify the process of exchanging files, but if you need a simpler yet efficient method, try Woof, short for Web Offer One File. It's a small Python script that facilitates the transfer of files across networks and only requires that the recipient have a web browser. Woof tries a different approach: it assumes that everybody has a web browser or a command-line web client installed. Woof is a small, simple, stupid web server that can easily be invoked on a single file: run $ woof filename and tell the recipient the URL that woof prints. Installation: openSUSE users can install Woof using the "1-click" installer. Usage: Using Woof is very simple: provide a valid IP address (your machine's IP), a port, and the name of the file you want to share with others. $ woof -i 192.168.1.2 -p 8888 testfile.txt
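The idea behind Woof, a web server that offers exactly one file, can be sketched with Python's standard library. This is not Woof's source code; it is a minimal illustration of the approach, and the names make_single_file_handler and serve_once are hypothetical.

```python
import http.server
import os
import threading


def make_single_file_handler(path):
    """Build a request handler that serves only the file at `path`."""
    filename = os.path.basename(path)

    class SingleFileHandler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            # Every GET, whatever its URL path, returns the same file.
            with open(path, "rb") as f:
                data = f.read()
            self.send_response(200)
            self.send_header("Content-Type", "application/octet-stream")
            self.send_header(
                "Content-Disposition", f'attachment; filename="{filename}"'
            )
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

        def log_message(self, format, *args):
            pass  # keep the sketch quiet; drop this override to see logs

    return SingleFileHandler


def serve_once(path, host="127.0.0.1", port=0):
    """Serve `path` for exactly one request; return (server, thread, url)."""
    server = http.server.HTTPServer((host, port), make_single_file_handler(path))
    # handle_request() processes a single request and then returns.
    thread = threading.Thread(target=server.handle_request, daemon=True)
    thread.start()
    # Port 0 asks the OS for a free port; read back the one it assigned.
    url = f"http://{host}:{server.server_address[1]}/"
    return server, thread, url


if __name__ == "__main__":
    server, thread, url = serve_once(__file__)
    print("Tell the recipient to open:", url)
    thread.join()
    server.server_close()
```

Binding to 127.0.0.1 keeps the sketch local to one machine. Sharing across a network would mean passing the machine's own address and a fixed port, much as the -i and -p options do in the Woof command above.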

The 20 Coolest Jobs in Information Security
#1 Information Security Crime Investigator/Forensics Expert
#2 System, Network, and/or Web Penetration Tester
#3 Forensic Analyst
#4 Incident Responder
#5 Security Architect
#6 Malware Analyst
#7 Network Security Engineer
#8 Security Analyst
#9 Computer Crime Investigator
#10 CISO/ISO or Director of Security
#11 Application Penetration Tester
#12 Security Operations Center Analyst
#13 Prosecutor Specializing in Information Security Crime
#14 Technical Director and Deputy CISO
#15 Intrusion Analyst
#16 Vulnerability Researcher/Exploit Developer
#17 Security Auditor
#18 Security-savvy Software Developer
#19 Security Maven in an Application Developer Organization
#20 Disaster Recovery/Business Continuity Analyst/Manager

#1 - Information Security Crime Investigator/Forensics Expert - Top Gun Job The thrill of the hunt! You never encounter the same crime twice! How to Be Successful: Stay abreast of the latest attack methodologies.

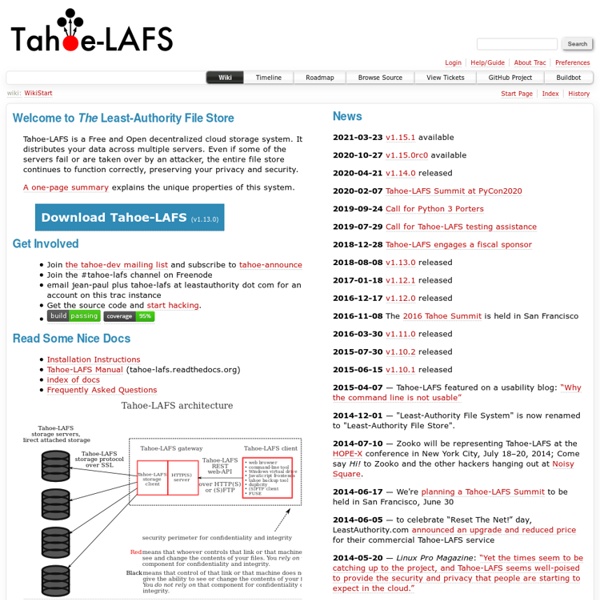

Tahoe Least-Authority Filesystem Tahoe-LAFS (Tahoe Least-Authority Filesystem) is a free and open, secure, decentralized, fault-tolerant, peer-to-peer distributed data store and distributed file system.[4][5] It can be used as an online backup system, or serve as a file or web host similar to Freenet,[6] depending on the front-end used to insert and access files in the Tahoe system. Tahoe can also be used in a RAID-like manner, combining multiple disks into a single large RAIN[7] pool of reliable data storage. Zooko Wilcox-O'Hearn is one of the developers.[8][9] Fork: A patched version of Tahoe-LAFS, made to run on the anonymous network I2P and supporting multiple introducers, has existed since 2011. References: Haver, Eirik; Melvold, Eivind and Ruud, Pål (23 September 2011).