Reed's law

Reed's law is the assertion of David P. Reed that the utility of large networks, particularly social networks, can scale exponentially with the size of the network. The reason for this is that the number of possible sub-groups of network participants is $2^N - N - 1$, where $N$ is the number of participants: of the $2^N$ subsets of the participants, the $N$ single-member sets and the empty set do not form groups. This grows much more rapidly than either the number of participants, $N$, or the number of possible pair connections, $N(N - 1)/2$ (which follows Metcalfe's law), so that even if the utility of groups available to be joined is very small on a peer-group basis, eventually the network effect of potential group membership can dominate the overall economics of the system.

Quote

From David P. "[E]ven Metcalfe's law understates the value created by a group-forming network [GFN] as it grows.

Criticism

Other analysts of network value functions, including Andrew Odlyzko and Eric S.
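A minimal Python sketch, for illustration only, comparing the growth rates named above. The helper names `reed_groups`, `metcalfe_pairs` and `count_subgroups_brute_force` are hypothetical; the brute-force function enumerates every subset of two or more participants with `itertools.combinations`, which confirms the closed form $2^N - N - 1$ for small $N$.

```python
from itertools import combinations


def reed_groups(n: int) -> int:
    """Closed form 2^n - n - 1: all subsets minus the n singletons and the empty set."""
    return 2**n - n - 1


def metcalfe_pairs(n: int) -> int:
    """Number of possible pair connections, n(n - 1)/2."""
    return n * (n - 1) // 2


def count_subgroups_brute_force(n: int) -> int:
    """Enumerate every subset of two or more of n participants and count it."""
    return sum(1 for k in range(2, n + 1) for _ in combinations(range(n), k))


if __name__ == "__main__":
    # The enumeration matches the closed form for small networks.
    for n in range(12):
        assert count_subgroups_brute_force(n) == reed_groups(n)

    # Pairs (Metcalfe) vs. sub-groups (Reed) for a few network sizes.
    print(f"{'N':>3} {'N(N-1)/2':>9} {'2^N-N-1':>11}")
    for n in (2, 5, 10, 20, 30):
        print(f"{n:>3} {metcalfe_pairs(n):>9} {reed_groups(n):>11}")
```

For N = 10 there are 45 possible pairs but 1,013 possible sub-groups; by N = 30 the pair count is 435 while the sub-group count exceeds a billion. The brute-force check is kept to small N because it visits every subset.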

Thinking Networks II

James Lawley

First presented to The Developing Group on 3 June 2006 (an earlier version, Thinking Networks I, was presented on 5 June 2004).

Note: There are three types of description in this paper.

I'll start with a tribute to Fritjof Capra, who made an early and significant contribution to bringing the importance of networks to my attention when he asked: "Is there a common pattern of organization that can be identified in all living systems?"

The kinds of networks we shall be considering are complex adaptive or complex dynamic networks. However, none of this would be very interesting were it not for the fact that, in spite of their complexity and in spite of the adaptive and dynamic nature of these networks, recent research has shown them to have remarkably consistent patterns of organisation.

"We're accustomed to thinking in terms of centralized control, clear chains of command, the straightforward logic of cause and effect."

Triangular number

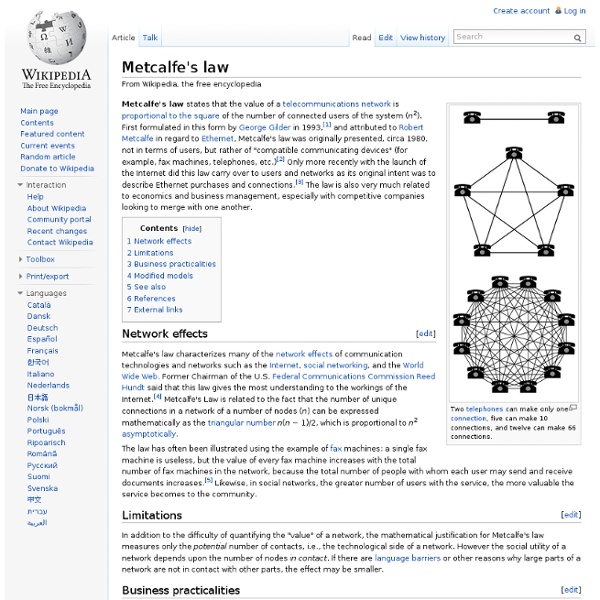

The first six triangular numbers

A triangular number or triangle number counts the objects that can form an equilateral triangle, as in the diagram above. The nth triangle number is the number of dots composing a triangle with n dots on a side, and is equal to the sum of the n natural numbers from 1 to n. The triangle numbers are given by the following explicit formulas:

$$T_n = \sum_{k=1}^{n} k = 1 + 2 + 3 + \dots + n = \frac{n(n+1)}{2} = \binom{n+1}{2},$$

where $\binom{n+1}{2}$ is a binomial coefficient. The triangular number $T_n$ solves the "handshake problem" of counting the number of handshakes if each person in a room with $n + 1$ people shakes hands once with each person. Triangle numbers are the additive analog of the factorials, which are the products of integers from 1 to n.

The number of line segments between closest pairs of dots in the triangle can be represented in terms of the number of dots or with a recurrence relation:

$$L_n = 3T_{n-1} = \frac{3}{2}n(n-1), \qquad L_n = L_{n-1} + 3(n-1), \quad L_1 = 0.$$

In the limit, the ratio between the two numbers, dots and line segments, is

$$\lim_{n\to\infty} \frac{T_n}{L_n} = \frac{1}{3}.$$

Relations to other figurate numbers

with and where T is a triangular number.
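The formulas for $T_n$ and $L_n$ in the triangular-number entry can be checked numerically. The following is a quick Python sketch, not a reference implementation; the function names are hypothetical, and the segment count uses the closed form $L_n = 3T_{n-1}$ and the recurrence $L_n = L_{n-1} + 3(n-1)$ with $L_1 = 0$ as given there.

```python
from itertools import combinations
from math import comb


def triangular(n: int) -> int:
    """T_n = n(n + 1)/2, the n-th triangular number."""
    return n * (n + 1) // 2


def triangular_by_sum(n: int) -> int:
    """T_n as the sum of the natural numbers 1..n."""
    return sum(range(1, n + 1))


def handshakes(people: int) -> int:
    """Count handshakes by enumerating every unordered pair of people."""
    return sum(1 for _ in combinations(range(people), 2))


def segments(n: int) -> int:
    """L_n = 3 * T_{n-1}: closest-pair segments in a triangle with n dots per side."""
    return 3 * triangular(n - 1)


def segments_by_recurrence(n: int) -> int:
    """L_n = L_{n-1} + 3(n - 1), starting from L_1 = 0."""
    total = 0
    for k in range(2, n + 1):
        total += 3 * (k - 1)
    return total


if __name__ == "__main__":
    for n in range(1, 7):
        # Closed form, direct sum and binomial coefficient C(n+1, 2) agree.
        assert triangular(n) == triangular_by_sum(n) == comb(n + 1, 2)
        # A room with n + 1 people produces T_n handshakes.
        assert handshakes(n + 1) == triangular(n)
        # Closed form and recurrence for line segments agree.
        assert segments(n) == segments_by_recurrence(n)

    print([triangular(n) for n in range(1, 7)])  # → [1, 3, 6, 10, 15, 21]
    # The dots-to-segments ratio T_n / L_n approaches 1/3 as n grows.
    print(triangular(1000) / segments(1000))
```

Since $T_n / L_n = (n+1) / (3(n-1))$, the ratio exceeds 1/3 by exactly $2 / (3(n-1))$, so the printed value for n = 1000 is only slightly above 1/3.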

Casual Observation from Fractal Network Growth Experiments

This happens to be one of those rare instances where the benefit of hindsight does not make me regret something said flippantly on a panel. I deeply believe that in order to truly change the world we cannot simply "throw analytics at the problem." To that end, the medical and health industries are perhaps the most primed to be disrupted by data and analytics. It is incredibly exciting to be at an organization that is both working within the current framework of health care and data to create new insight for people and pushing the envelope with respect to individuals' relationships with their own health. I feel lucky to have an opportunity to move into the health data space now.

Sensor data

The past decade of development in "big data" has, in large part, been built on top of the need to understand web log files. We have built technology and algorithms to understand the Web, and we have done a great job.

Strength of team

Here's to the next adventure!

Visualization and evolution of the scientific structure of fuzzy sets research in Spain

A.G. López-Herrera, M.J. Cobo, E. Herrera-Viedma, F. R. E.

Introduction

Fuzzy set theory, which was founded by Zadeh (1965), has emerged as a powerful way of quantitatively representing and manipulating imprecision in problems. In Spain, the first paper on fuzzy set theory was published by Trillas and Riera (1978). According to the ISI Web of Science, more than one hundred thousand papers on the foundations and applications of fuzzy set theory have been published in journals since 1965. This paper presents the first bibliometric study of the research carried out by the Spanish fuzzy set community. The study reveals the main themes treated by the Spanish fuzzy set theory community from 1978 to 2008.

They must be among the ten most productive countries (according to data in the ISI Web of Science); only two countries from each geographical area (America, Europe and Asia) are considered; and their first paper on the topic must have been published in or before 1980.

Methods