Doger.io - wow such container.

Powerstrip-flocker: Portable volumes using just the Docker CLI · ClusterHQ

Migration of data between hosts in a Docker cluster is a serious challenge.

Until now, a Docker data volume on a server has been stuck there, with no powerful tooling for moving it along with its container to a new host. Despite how easy it is to spin up a Dockerized database, the difficulty of managing where the data resides has meant that most of us haven’t yet containerized our data services. The good news is that today, using nothing more than the Docker client, we can migrate our data volumes between servers and start containerizing our database, queue and key-value store microservices.

Before seeing exactly how we can do this, let’s look at a simple example.

Quick-fire example of Docker data volume migration

Let’s say we have 2 hosts, node1 and node2.

```
node1$ sudo docker run -v /flocker/test001:/data \
    ubuntu sh -c "echo powerflock > /data/file.txt"
```

This runs an application which will write some data to the volume.

Powerstrip-flocker combines two technologies:

Docker Without Docker. Recurse Center, 16 April 2015

Some common questions.

A production ready Docker workflow. Part 2: The Storage Problem

A few days ago I wrote about how we at IIIEPE started using Docker in production.

It really changed the way we develop and deploy web-based applications. In this series of posts I'll keep writing about our experience, the problems we faced and how we solved them. The last post didn't have as much context as you probably needed to understand why we did what we did, but if you're still reading, allow me to explain. IIIEPE is a government institute and we love Open Source. We develop most of our projects using Drupal and Node.js, and we have our own datacenter. As we began planning the migration and the new workflow, we created a wish list. It included replicating 100% of our websites to keep downtime to a minimum during server maintenance: even the HTML websites that don't receive much traffic would run as at least 2 instances on different VMs.
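The wish-list requirement that every site run as at least 2 instances on different VMs is an anti-affinity placement rule. The post does not say how IIIEPE actually schedules its containers, so the following is only a minimal Python sketch under stated assumptions: a fixed list of VMs, and a greedy strategy that gives each site's replicas to the currently least-loaded VMs. The function `place_replicas` and the site and VM names are hypothetical.

```python
from typing import Dict, List


def place_replicas(sites: List[str], vms: List[str], replicas: int = 2) -> Dict[str, List[str]]:
    """Assign `replicas` instances of each site to distinct VMs.

    Hypothetical helper, not IIIEPE's tooling. Greedy anti-affinity:
    each site takes the `replicas` VMs that currently host the fewest
    instances, so no site ever has two instances on the same VM.
    """
    if replicas > len(vms):
        raise ValueError("need at least as many VMs as replicas per site")
    load = {vm: 0 for vm in vms}  # instances placed on each VM so far
    placement: Dict[str, List[str]] = {}
    for site in sites:
        # sorted() is stable, so VMs with equal load keep their listed order.
        chosen = sorted(vms, key=lambda vm: load[vm])[:replicas]
        for vm in chosen:
            load[vm] += 1
        placement[site] = chosen
    return placement


if __name__ == "__main__":
    layout = place_replicas(["portal", "blog", "docs"], ["vm1", "vm2", "vm3"])
    for site, hosts in layout.items():
        print(site, "->", ", ".join(hosts))
```

With three sites and three VMs this prints `portal -> vm1, vm2`, `blog -> vm3, vm1` and `docs -> vm2, vm3`. Every VM ends up with two instances, and taking any single VM down for maintenance still leaves each site with a running copy.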

A production ready Docker workflow

Docker is now 2 years old this week, and here at IIIEPE we have been using it in production for about 3 months.

I want to share our experience and the workflow we had to design. We run several websites using Drupal, PHP and Node.js, among others, and our goal was to run all of our applications with Docker, so we designed the following workflow:

- All developers use Docker to create the application.
- Our GitLab instance has webhooks configured, so when a new push is detected, it triggers a Jenkins task.
- Every Jenkins task follows the same layout: clone the latest code from GitLab, run the tests, log in to our private Docker registry, build a new image with the latest code, and push that image to the registry.

Each of those steps required several days of planning, testing and work to design the basic guidelines. The first thing we did was build base images that we could use for our own needs.

Docker and S6 – My New Favorite Process Supervisor

In my previous blog post, I wrote about how I like to use a process supervisor in my containers, and rattled a few off.

I decided I ought to expand a bit on one in particular – S6, by Laurent Bercot. Why S6, why not Supervisor? I know a lot of people have been using Supervisor in their containers, and it’s a great system! It’s very easy to learn and has a lot of great features. Phusion produces a very popular, very solid base image for Ubuntu based around ~~Supervisor~~ Runit.

UPDATE 2014-12-09: I mistakenly wrote that Phusion uses Supervisor, when they in fact use Runit.

Jonathan Bergknoff: Building good docker images

The Docker registry is bursting at the seams.

At the time of this writing, a search for "node" gets just under 1000 hits. How does one choose?

Fig.yml reference

Each service defined in fig.yml must specify exactly one of `image` or `build`.
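Because every service needs exactly one of `image` or `build`, the rule is easy to check mechanically before handing a file to Fig. Below is a minimal Python sketch. It assumes fig.yml has already been parsed into a dict of service name to options, for example with a YAML library; that step is left out to keep the example dependency-free. The function `validate_fig_services` and the sample services are hypothetical and not part of Fig itself.

```python
from typing import Any, Dict, List, Mapping


def validate_fig_services(services: Mapping[str, Mapping[str, Any]]) -> List[str]:
    """Check Fig's rule that each service sets exactly one of image or build.

    `services` is assumed to be fig.yml already parsed into a dict mapping
    service names to their options. Returns one message per offending
    service; an empty list means every service passes.
    """
    errors: List[str] = []
    for name, options in services.items():
        has_image = "image" in options
        has_build = "build" in options
        if has_image and has_build:
            errors.append(f"{name}: specifies both 'image' and 'build'")
        elif not (has_image or has_build):
            errors.append(f"{name}: specifies neither 'image' nor 'build'")
    return errors


if __name__ == "__main__":
    # Hypothetical config: 'web' builds from the current directory,
    # 'db' wrongly sets both keys, 'cache' sets neither.
    config: Dict[str, Dict[str, Any]] = {
        "web": {"build": ".", "ports": ["8000:8000"]},
        "db": {"image": "postgres", "build": "./db"},
        "cache": {"ports": ["6379:6379"]},
    }
    for problem in validate_fig_services(config):
        print(problem)
```

Running it reports `db` and `cache`. `web` passes because it sets only `build`; its `ports` entry is one of the optional keys and is not checked.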

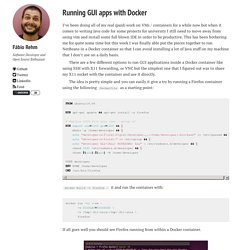

Other keys are optional, and are analogous to their `docker run` command-line counterparts. As with `docker run`, options specified in the Dockerfile (e.g. `CMD`, `EXPOSE`, `VOLUME`, `ENV`) are respected by default; you don't need to specify them again in fig.yml.

`image`: Tag or partial image ID.

Eight Docker Development Patterns

Running GUI apps with Docker – Fábio Rehm

I’ve been doing all of my real (paid) work on VMs / containers for a while now, but when it comes to writing Java code for some university projects I still need to move away from vim and install a full-blown IDE in order to be productive.

This has been bothering me for quite some time, but this week I was finally able to put the pieces together to run NetBeans in a Docker container, so that I can avoid installing a lot of Java stuff on my machine that I don’t use on a daily basis. There are a few different options for running GUI applications inside a Docker container, such as SSH with X11 forwarding or VNC, but the simplest one I found was to share my X11 socket with the container and use it directly. The idea is pretty simple, and you can easily give it a try by running a Firefox container using the following Dockerfile as a starting point: