22. Generators

22.1 Overview

Generators, a new feature of ES6, are functions that can be paused and resumed (think cooperative multitasking or coroutines).

That helps with many applications. Two important ones are:

- Implementing iterables
- Blocking on asynchronous function calls

The following subsections give brief overviews of these applications.

22.1.1 Implementing iterables via generators

The following function returns an iterable over the properties of an object, one [key, value] pair per property:

    // The asterisk after `function` means that
    // `objectEntries` is a generator
    function* objectEntries(obj) {
        const propKeys = Reflect.ownKeys(obj);
        for (const propKey of propKeys) {
            // `yield` returns a value and then pauses
            // the generator. Later, execution continues
            // where it was previously paused.
            yield [propKey, obj[propKey]];
        }
    }
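Here is a minimal sketch of consuming `objectEntries()`. The generator is repeated in full so the example runs on its own. The sample object `jane` is purely illustrative.

The sketch shows the usual `for-of` loop and also the lower-level `next()` calls that `for-of` makes for you. Each `next()` resumes the generator, which runs until the next `yield` pauses it again.

```javascript
// Generator from the section above: yields one [key, value] pair per own property.
function* objectEntries(obj) {
  const propKeys = Reflect.ownKeys(obj);
  for (const propKey of propKeys) {
    yield [propKey, obj[propKey]];
  }
}

// Hypothetical sample object, used only for illustration.
const jane = { first: 'Jane', last: 'Doe' };

// 1) Typical use: for-of drives the generator until it is done.
for (const [key, value] of objectEntries(jane)) {
  console.log(`${key}: ${value}`); // prints "first: Jane", then "last: Doe"
}

// 2) The same protocol by hand: each next() resumes execution
//    and runs until the following `yield` pauses it again.
const gen = objectEntries(jane);
console.log(gen.next()); // { value: [ 'first', 'Jane' ], done: false }
console.log(gen.next()); // { value: [ 'last', 'Doe' ], done: false }
console.log(gen.next()); // { value: undefined, done: true }
```

The generator never builds the full list of entries. Each pair is produced only when the consumer asks for the next one.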

`objectEntries()` returns an iterable, so it is consumed with a `for-of` loop. How exactly `objectEntries()` works is explained in a dedicated section.

22.1.2 Blocking on asynchronous function calls

With the control flow library co, a generator can asynchronously retrieve two JSON files while its code reads as if the calls were blocking. The generator yields a Promise for each file, and co resumes the generator with the result once that Promise is fulfilled.

simple-openni: how to install. simple-openni is a simple OpenNI wrapper for Processing.

Kinect Physics Tutorial for Processing.

Therenect. The Therenect is a virtual Theremin for the Kinect controller.

It defines two virtual antenna points, which control the pitch and volume of a simple oscillator. The distance to these points can be adjusted by freely moving the hand in three dimensions or by reshaping the hand, which allows gestures quite similar to playing an actual Theremin. This video was recorded prior to this release; an updated video introducing the improved features of the current version will follow soon.

Skeleton Tracking with the Kinect. This tutorial explains how to track human skeletons using the Kinect.

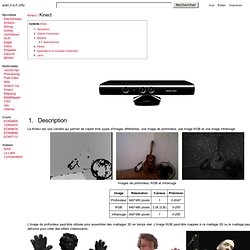

The OpenNI library can identify the position of key joints on the human body, such as the hands, elbows, knees, and head.

The Kinect is a camera that can capture three different types of images: a depth image, an RGB image, and an infrared image.

(Figure: depth, RGB, and infrared images.)

The depth image can be used to assemble 3D meshes in real time.

The RGB image can be mapped onto this 3D mesh, or the mesh can be deformed to create interesting effects. By applying analysis modules to the depth image, it is possible to track several people interacting with the scene and interpret their movements.

Identifying People in a Scene with the Kinect. We'll start with the sketch we wrote to draw the depth image from the Kinect in Drawing Depth with the Kinect:

    import SimpleOpenNI.*;

    SimpleOpenNI context;

    void setup() {
      // instantiate a new context
      context = new SimpleOpenNI(this);
      // enable depth image generation
      context.enableDepth();
      // create a window the size of the scene
      size(context.depthWidth(), context.depthHeight());
    }

    void draw() {
      // update the camera
      context.update();
      // draw depth image
      image(context.depthImage(), 0, 0);
    }
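The Kinect description above says the depth image can be used to assemble a 3D mesh. One way to do this is to back-project every depth pixel to a 3D point with a pinhole camera model:

X = (u − cx)·Z/fx, Y = (v − cy)·Z/fy

Neighbouring points are then joined into triangles. This is a minimal sketch, not SimpleOpenNI's own code, and several parts are hypothetical placeholders:

- the function names `depthToPoints` and `gridTriangles`;
- the intrinsics `fx, fy, cx, cy`;
- the tiny 2×2 depth array.

Depth values are assumed to be in millimetres, with 0 meaning "no reading". Real intrinsics would come from the sensor's calibration.

```javascript
// Hypothetical pinhole intrinsics (focal lengths and principal point, in pixels).
// Placeholder values for illustration only; use your sensor's calibration.
const intrinsics = { fx: 2, fy: 2, cx: 0.5, cy: 0.5 };

// Back-project a row-major depth image (assumed millimetres, 0 = no reading)
// into 3D points [X, Y, Z]. Pixels without a reading map to null.
function depthToPoints(depth, width, height, { fx, fy, cx, cy }) {
  const points = new Array(width * height);
  for (let v = 0; v < height; v++) {
    for (let u = 0; u < width; u++) {
      const z = depth[v * width + u];
      points[v * width + u] =
        z > 0 ? [((u - cx) * z) / fx, ((v - cy) * z) / fy, z] : null;
    }
  }
  return points;
}

// Join each 2x2 block of valid neighbouring pixels into two triangles
// (index triples into the points array), giving a simple grid mesh.
function gridTriangles(points, width, height) {
  const triangles = [];
  for (let v = 0; v + 1 < height; v++) {
    for (let u = 0; u + 1 < width; u++) {
      const a = v * width + u; // top-left
      const b = a + 1;         // top-right
      const c = a + width;     // bottom-left
      const d = c + 1;         // bottom-right
      // Skip blocks containing a pixel with no depth reading.
      if (points[a] && points[b] && points[c] && points[d]) {
        triangles.push([a, c, b], [b, c, d]);
      }
    }
  }
  return triangles;
}

// Tiny 2x2 depth image used only as an example.
const depth = [1000, 1000, 1000, 1000];
const pts = depthToPoints(depth, 2, 2, intrinsics);
console.log(gridTriangles(pts, 2, 2)); // [ [ 0, 2, 1 ], [ 1, 2, 3 ] ]
```

Skipping blocks that contain a missing reading keeps holes in the depth data from producing stretched triangles. A real-time version would reuse the arrays between frames rather than allocating new ones.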

Capturing Dance: Exploring Movement with Computer Vision (ITP Spring Show 2011). We've created a series of software "tools" for capturing and manipulating Kinect footage of a live dancer through sound and gesture cues.

With these tools, we've produced a set of pre-recorded videos, each exploring one tool in a short choreographic "study." Each study touches on a different aspect of using visual imagery to underline and transform the live dance performance. We've also begun to experiment with manipulating the abstracted Kinect imagery through sound as a way of visualizing the interaction between musician and dancer.

Background: Kinect; sound libraries in Processing: Sonia, Minim. Audience: kids and adults.

OpenKinect.

Skeleton tracking with the Kinect and OSCulator.

Use Kinect with Mac OSX.

COS429: Computer Vision. Overview: On your one-minute walk from the coffee machine to your desk each morning, you pass by dozens of scenes – a kitchen, an elevator, your office – and you effortlessly recognize them and perceive their 3D structure.

But this one-minute scene-understanding problem has been an open challenge in computer vision since the field was first established 50 years ago. In this class, we will learn state-of-the-art algorithms and study how to build computer systems that automatically understand visual scenes, both inferring their semantics and extracting their 3D structure. This course requires programming experience as well as basic linear algebra.

Previous knowledge of visual computing will be helpful.

Computer Vision: Algorithms and Applications. © 2010 Richard Szeliski. Welcome to the Web site for my computer vision textbook, which you can now purchase at a variety of locations, including Springer (SpringerLink, DOI), Amazon, and Barnes & Noble.

The book is also available in Chinese and Japanese (translated by Prof. Toru Tamaki). This book is largely based on the computer vision courses that I have co-taught at the University of Washington (2008, 2005, 2001) and Stanford (2003) with Steve Seitz and David Fleet. You are welcome to download the PDF from this Web site for personal use, but not to repost it on any other Web site. The PDFs should be enabled for commenting directly in your viewer. If you have any comments or feedback on the book, please send me e-mail.

This Web site will also eventually contain supplementary materials for the textbook, such as figures and images from the book, slide sets, pointers to software, and a bibliography.

Arduino Tutorials. Arduino Programming Reference.