Deep Learning Studio - Desktop - DeepCognition.ai.

Free Machine Learning eBooks - March 2017. By Shai Ben-David and Shai Shalev-Shwartz. Machine learning is one of the fastest growing areas of computer science, with far-reaching applications.

The aim of this textbook is to introduce machine learning, and the algorithmic paradigms it offers, in a principled way. The book provides an extensive theoretical account of the fundamental ideas underlying machine learning and the mathematical derivations that transform these principles into practical algorithms.

OpenNN start. In this tutorial you'll learn how to start using OpenNN: Where can I find information about it? Where can I download the library? How can I get support and training? What are the main advantages of using OpenNN? What is the difference between OpenNN and Neural Designer?

Contents:

1. OpenNN (Open Neural Networks Library) is a software library written in the C++ programming language which implements neural networks, a main area of deep learning research. OpenNN implements data mining methods as a bundle of functions. The main advantage of OpenNN is its high performance.
2. The library is open source, hosted at SourceForge and GitHub. You can download OpenNN from either site.
3. OpenNN's documentation, tutorials, and guides are constantly evolving. The documentation is composed of documents, journal articles, book chapters and conference proceedings, in order to give you a complete overview of the library.

PCA and ICA Package - File Exchange - MATLAB Central.

LibICA - ICA library. FastICA C implementation, by Martin Tůma. Description: libICA is a C library that implements the FastICA [1] algorithm for Independent Component Analysis (ICA).

It is based on the CRAN fastICA [2] package for R.

Synopsis: #include <libICA.h> void fastICA(double** X, int rows, int cols, int compc, double** K, double** W, double** A, double** S);

Parameters:

- X: pre-processed data matrix [rows, cols]
- compc: number of components to be extracted
- K: pre-whitening matrix that projects data onto the first compc principal components
- W: estimated un-mixing matrix
- A: estimated mixing matrix
- S: estimated source matrix

Details.

Research Blog: Open sourcing the Embedding Projector: a tool for visualizing high dimensional data.

Posted by Daniel Smilkov and the Big Picture group. Recent advances in Machine Learning (ML) have shown impressive results, with applications including image recognition, language translation, and medical diagnosis.

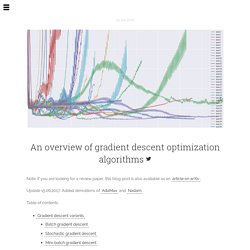

With the widespread adoption of ML systems, it is increasingly important for research scientists to be able to explore how the data is being interpreted by the models. However, one of the main challenges in exploring this data is that it often has hundreds or even thousands of dimensions, requiring special tools to investigate the space. To enable a more intuitive exploration process, we are open-sourcing the Embedding Projector, a web application for interactive visualization and analysis of high-dimensional data recently shown as an A.I. Experiment, as part of TensorFlow. We are also releasing a standalone version at projector.tensorflow.org, where users can visualize their high-dimensional data without the need to install and run TensorFlow.

An overview of gradient descent optimization algorithms. Note: If you are looking for a review paper, this blog post is also available as an article on arXiv.
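A minimal sketch of the basic update that a gradient descent overview starts from: the parameters are moved repeatedly in the direction opposite to the gradient of the objective. The objective function, learning rate, step count, and function names below are illustrative assumptions, not taken from the post.

```python
def gradient_descent(grad, theta0, learning_rate=0.1, steps=100):
    """Plain gradient descent: theta <- theta - learning_rate * grad(theta)."""
    theta = list(theta0)
    for _ in range(steps):
        g = grad(theta)
        # Step against the gradient, one coordinate at a time.
        theta = [t - learning_rate * gi for t, gi in zip(theta, g)]
    return theta


def grad_quadratic(theta):
    # Gradient of the assumed toy objective f(x, y) = (x - 3)^2 + (y + 1)^2,
    # whose minimum is at (3, -1).
    x, y = theta
    return [2.0 * (x - 3.0), 2.0 * (y + 1.0)]


minimum = gradient_descent(grad_quadratic, [0.0, 0.0])
print(minimum)  # close to [3.0, -1.0]
```

On this toy objective each step shrinks the distance to the minimum by a constant factor, so 100 steps are more than enough. The optimizers surveyed in such overviews (for example AdaMax and Nadam) change how the step is computed, not this overall loop.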

Update 15.06.2017: Added derivations of AdaMax and Nadam.

Gradient descent is one of the most popular algorithms to perform optimization and by far the most common way to optimize neural networks. At the same time, every state-of-the-art Deep Learning library contains implementations of various algorithms to optimize gradient descent (e.g. lasagne's, caffe's, and keras' documentation).

Jupyter Notebook Viewer.

9 Key Deep Learning Papers, Explained. If you are interested in understanding the current state of deep learning, this post outlines and thoroughly summarizes 9 of the most influential contemporary papers in the field.

By Adit Deshpande, UCLA.

Introduction: In this post, we’ll summarize many of the new and important developments in the field of computer vision and convolutional neural networks. We’ll look at some of the most important papers that have been published over the last 5 years and discuss why they’re so important. The first half of the list (AlexNet to ResNet) deals with advancements in general network architecture, while the second half is a collection of interesting papers in other subareas.

1. The one that started it all (though some may say that Yann LeCun’s paper in 1998 was the real pioneering publication).

Research Blog: Graph-powered Machine Learning at Google.

Posted by Sujith Ravi, Staff Research Scientist, Google Research. Recently, there have been significant advances in Machine Learning that enable computer systems to solve complex real-world problems.

One of those advances is Google’s large scale, graph-based machine learning platform, built by the Expander team in Google Research. A technology that is behind many of the Google products and features you may use every day, graph-based machine learning is a powerful tool that can be used to power useful features such as reminders in Inbox and smart messaging in Allo, or used in conjunction with deep neural networks to power the latest image recognition system in Google Photos.

C++ Library for Audio and Music.

SVM - Understanding the math - Part 1 - The margin - SVM Tutorial.