Computer programming. Overview. Within software engineering, programming (the implementation) is regarded as one phase in a software development process.

There is an ongoing debate on the extent to which the writing of programs is an art form, a craft, or an engineering discipline.[3] In general, good programming is considered to be the measured application of all three, with the goal of producing an efficient and evolvable software solution (the criteria for "efficient" and "evolvable" vary considerably). The discipline differs from many other technical professions in that programmers, in general, do not need to be licensed or pass any standardized (or governmentally regulated) certification tests in order to call themselves "programmers" or even "software engineers." Because the discipline covers many areas, which may or may not include critical applications, it is debatable whether licensing is required for the profession as a whole.

History. Some of the earliest computer programmers were women.

Programming paradigm. A programming paradigm is a fundamental style of computer programming, a way of building the structure and elements of computer programs.

Capabilities and styles of various programming languages are defined by their supported programming paradigms; some programming languages are designed to follow only one paradigm, while others support multiple paradigms. There are six main programming paradigms: imperative, declarative, functional, object-oriented, logic and symbolic programming.[1][2][3]

Object-oriented programming. Overview. Rather than structure programs as code and data, an object-oriented system integrates the two using the concept of an "object".
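As a minimal sketch of how an object integrates data and code, consider the following Python class. The `Lamp` class, its attributes, and its method are hypothetical names chosen for illustration; they do not come from the source.

```python
class Lamp:
    """A hypothetical object that bundles state (data) with behavior (code)."""

    def __init__(self, plugged_in: bool = True) -> None:
        # State: the data each Lamp object carries.
        self.plugged_in = plugged_in
        self.is_on = False

    def switch_on(self) -> bool:
        # Behavior: code that reads and updates the object's own state.
        # The lamp only turns on if it is plugged in.
        self.is_on = self.plugged_in
        return self.is_on


working_lamp = Lamp()
unplugged_lamp = Lamp(plugged_in=False)
print(working_lamp.switch_on())    # True
print(unplugged_lamp.switch_on())  # False
```

Each `Lamp` instance keeps its own copy of the state, while the behavior is defined once on the class.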

An object has state (data) and behavior (code). Objects correspond to things found in the real world.

Programming Paradigms (Stanford). Logic programming. Functional programming.

Imperative programming. The term "imperative programming" is used in opposition to declarative programming, which expresses what the program should accomplish without prescribing how to do it in terms of sequences of actions to be taken.
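The contrast between imperative and declarative styles can be illustrated with a small Python sketch. The task (summing the squares of the even numbers) and both function names are assumptions made for this example. Python is not a purely declarative language, so the second function is only "more declarative" in spirit.

```python
def sum_even_squares_imperative(values):
    # Imperative style: prescribe the sequence of actions step by step.
    total = 0
    for value in values:
        if value % 2 == 0:
            total += value * value
    return total


def sum_even_squares_declarative(values):
    # More declarative style: describe the desired result
    # (the sum of squares of even values) and leave the iteration
    # details to the language.
    return sum(value * value for value in values if value % 2 == 0)


numbers = [1, 2, 3, 4, 5, 6]
print(sum_even_squares_imperative(numbers))   # 56
print(sum_even_squares_declarative(numbers))  # 56
```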

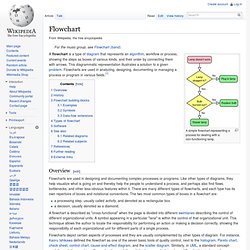

Functional and logic programming are examples of a more declarative approach.

Imperative, procedural, and declarative programming. Procedural programming could be considered a step towards declarative programming. A programmer can often tell, simply by looking at the names, arguments and return types of procedures (and related comments), what a particular procedure is supposed to do, without necessarily looking at the details of how it achieves its result. At the same time, a complete program is still imperative since it 'fixes' the statements to be executed and their order of execution to a large extent.

Flowchart. A simple flowchart representing a process for dealing with a non-functioning lamp.

A flowchart is a type of diagram that represents an algorithm, workflow or process, showing the steps as boxes of various kinds, and their order by connecting them with arrows. This diagrammatic representation illustrates a solution to a given problem.

Algorithm. Flow chart of an algorithm (Euclid's algorithm) for calculating the greatest common divisor (g.c.d.) of two numbers a and b in locations named A and B.

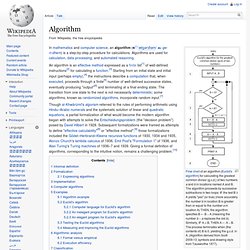

The algorithm proceeds by successive subtractions in two loops: IF the test B ≥ A yields "yes" (or true) (more accurately, the number b in location B is greater than or equal to the number a in location A), THEN the algorithm specifies B ← B − A (meaning the number b − a replaces the old b). Similarly, IF A > B, THEN A ← A − B. The process terminates when (the contents of) B is 0, yielding the g.c.d. in A. (Algorithm derived from Scott 2009:13; symbols and drawing style from Tausworthe 1977).

Pseudocode. Pseudocode is an informal high-level description of the operating principle of a computer program or other algorithm.
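The subtraction-based form of Euclid's algorithm described above can be sketched in Python as follows. The function name `gcd_by_subtraction` is a hypothetical choice, and the check for positive inputs is an added assumption: the textual description does not say how zero or negative inputs should be handled, and with a equal to 0 the loop would never end.

```python
def gcd_by_subtraction(a: int, b: int) -> int:
    """Greatest common divisor by repeated subtraction (sketch)."""
    # Assumption: both inputs are positive integers.
    if a <= 0 or b <= 0:
        raise ValueError("both inputs must be positive integers")

    # The process terminates when B holds 0; the g.c.d. is then in A.
    while b != 0:
        if b >= a:
            b = b - a  # B <- B - A
        else:
            a = a - b  # A <- A - B (here A > B)
    return a


print(gcd_by_subtraction(48, 18))  # 6
```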

It uses the structural conventions of a programming language, but is intended for human reading rather than machine reading. Pseudocode typically omits details that are not essential for human understanding of the algorithm, such as variable declarations, system-specific code and some subroutines.

Programming language. The earliest programming languages preceded the invention of the digital computer and were used to direct the behavior of machines such as Jacquard looms and player pianos.[1] Thousands of different programming languages have been created, mainly in the computer field, and many more are still being created every year.

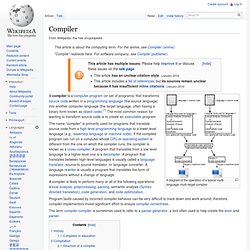

Many programming languages require computation to be specified in an imperative form (i.e., as a sequence of operations to perform), while other languages utilize other forms of program specification such as the declarative form (i.e., the desired result is specified, not how to achieve it).

Compiler. A diagram of the operation of a typical multi-language, multi-target compiler. A compiler is a computer program (or set of programs) that transforms source code written in a programming language (the source language) into another computer language (the target language, often having a binary form known as object code).[1] The most common reason for wanting to transform source code is to create an executable program.

Program faults caused by incorrect compiler behavior can be very difficult to track down and work around; therefore, compiler implementors invest significant effort to ensure compiler correctness. The term compiler-compiler is sometimes used to refer to a parser generator, a tool often used to help create the lexer and parser.

History. Software for early computers was primarily written in assembly language.

Towards the end of the 1950s, machine-independent programming languages were first proposed.

Interpreter (computing). Strategies an interpreter may use to execute a program:

- parse the source code and perform its behavior directly;
- translate source code into some efficient intermediate representation and immediately execute this;
- explicitly execute stored precompiled code[1] made by a compiler which is part of the interpreter system.

While interpretation and compilation are the two main means by which programming languages are implemented, they are not mutually exclusive, as most interpreting systems also perform some translation work, just like compilers.
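The strategy of translating source code into an intermediate representation and then immediately executing it can be observed with Python's built-in `compile` and `eval` functions. This is only an illustrative sketch: the helper name `run_expression` and the filename label `"<example>"` are assumptions, and `eval` should not be used on untrusted input.

```python
def run_expression(source: str):
    # Step 1: translate the source text into a code object,
    # an intermediate representation that holds bytecode.
    code_object = compile(source, "<example>", "eval")

    # Step 2: immediately execute that intermediate representation.
    return eval(code_object)


print(run_expression("2 * (3 + 4)"))  # 14
```

The same interpreter system therefore does some translation work before executing anything, which is why interpretation and compilation are not mutually exclusive.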

The terms "interpreted language" or "compiled language" signify that the canonical implementation of that language is an interpreter or a compiler, respectively. A high-level language is ideally an abstraction independent of particular implementations.

An illustration of the linking process.

Semantics. The formal study of semantics intersects with many other fields of inquiry, including lexicology, syntax, pragmatics, etymology and others. Independently, semantics is also a well-defined field in its own right, often with synthetic properties.[4] In the philosophy of language, semantics and reference are closely connected. Further related fields include philology, communication, and semiotics.

The formal study of semantics can therefore be manifold and complex.

Syntax (programming languages). In computer science, the syntax of a computer language is the set of rules that defines the combinations of symbols that are considered to be a correctly structured document or fragment in that language. This applies both to programming languages, where the document represents source code, and markup languages, where the document represents data. The syntax of a language defines its surface form.[1] Text-based computer languages are based on sequences of characters, while visual programming languages are based on the spatial layout and connections between symbols (which may be textual or graphical). Documents that are syntactically invalid are said to have a syntax error. Computer language syntax is generally distinguished into three levels:

- Words – the lexical level, determining how characters form tokens;
- Phrases – the grammar level, narrowly speaking, determining how tokens form phrases;
- Context – determining what objects or variable names refer to, if types are valid, etc.
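The lexical level, where characters are grouped into tokens, can be sketched with a small regular-expression tokenizer in Python. The token categories (`NUMBER`, `NAME`, `OP`) and the tiny character set are assumptions made for this example; they do not correspond to any particular language.

```python
import re

# One alternative per hypothetical token kind; leading whitespace is skipped.
TOKEN_PATTERN = re.compile(
    r"\s*(?:(?P<NUMBER>\d+)|(?P<NAME>[A-Za-z_]\w*)|(?P<OP>[-+*/=()]))"
)


def tokenize(text):
    """Split text into (kind, value) tokens, or raise SyntaxError."""
    tokens = []
    text = text.rstrip()
    position = 0
    while position < len(text):
        match = TOKEN_PATTERN.match(text, position)
        if match is None:
            # No lexical rule applies: the document is syntactically invalid.
            raise SyntaxError(f"unexpected character near position {position}")
        kind = match.lastgroup
        tokens.append((kind, match.group(kind)))
        position = match.end()
    return tokens


print(tokenize("x = 3 + 42"))
# [('NAME', 'x'), ('OP', '='), ('NUMBER', '3'), ('OP', '+'), ('NUMBER', '42')]
```

A grammar-level parser would then consume these tokens to form phrases, and a later context-level pass would check what each name refers to.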

List of programming languages. The aim of this list of programming languages is to include all notable programming languages in existence, both those in current use and historical ones, in alphabetical order, except for dialects of BASIC and esoteric programming languages. Note: Dialects of BASIC have been moved to the separate List of BASIC dialects. Note: This page does not list esoteric programming languages.

List of programming languages by type. Timeline of programming languages.

Perl. Though Perl is not officially an acronym,[5] there are various backronyms in use, such as: Practical Extraction and Reporting Language.[6] Perl was originally developed by Larry Wall in 1987 as a general-purpose Unix scripting language to make report processing easier.[7] Since then, it has undergone many changes and revisions. The latest major stable revision of Perl 5 is 5.18, released in May 2013.

Perl 6, which began as a redesign of Perl 5 in 2000, eventually evolved into a separate language. Both languages continue to be developed independently by different development teams and liberally borrow ideas from one another.

History. Early versions. Wall began work on Perl in 1987, while working as a programmer at Unisys,[9] and released version 1.0 to the comp.sources.misc newsgroup on December 18, 1987.[14] The language expanded rapidly over the next few years.

C (programming language). C is one of the most widely used programming languages of all time,[8][9] and C compilers are available for the majority of available computer architectures and operating systems. C is an imperative (procedural) language. It was designed to be compiled using a relatively straightforward compiler, to provide low-level access to memory, to provide language constructs that map efficiently to machine instructions, and to require minimal run-time support.

Generational list of programming languages.