Software links « Deep Learning. If your software belongs here, email us and let us know.

Getting Started with Deep Learning and Python - PyImageSearch. Update – January 27, 2015: Based on the feedback from commenters, I have updated the source code in the download to include the original MNIST dataset!

No external downloads required! Update – March 2015: The nolearn package has now deprecated and removed the dbn module. When you go to install the nolearn package, be sure to clone the repository, check out the 0.5b1 version, and then install it. Do not install the current version without first checking out the 0.5b1 version!

In the future I will post an update on how to use the updated nolearn package!

Deep Learning Bibliography.

Deep Learning. Schedule Overview.

Building intelligent systems that are capable of extracting high-level representations from high-dimensional sensory data lies at the core of solving many AI-related tasks, including visual object or pattern recognition, speech perception, and language understanding.

Theoretical and biological arguments strongly suggest that building such systems requires deep architectures that involve many layers of nonlinear processing. Many existing learning algorithms use shallow architectures, including neural networks with only one hidden layer, support vector machines, kernel logistic regression, and many others. The internal representations learned by such systems are necessarily simple and are incapable of extracting some types of complex structure from high-dimensional input.

Deep learning from the bottom up.

This document was started by Roger Grosse, but as an experiment we have made it publicly editable.

In applied machine learning, one of the most thankless and time-consuming tasks is coming up with good features that capture relevant structure in the data. Deep learning is a new and exciting subfield of machine learning which attempts to sidestep the whole feature design process, instead learning complex predictors directly from the data. Most deep learning approaches are based on neural nets, where complex high-level representations are built through a cascade of units computing simple nonlinear functions.
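To make the "cascade of units computing simple nonlinear functions" concrete, here is a minimal sketch of a forward pass through a small stack of layers. It is illustrative only: the layer sizes, the tanh nonlinearity, the random untrained weights, and the helper names (`init_layer`, `dense_layer`, `forward`) are all assumptions made for this example, not taken from any of the resources listed here.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Hypothetical helper: small random weights and zero biases for one layer."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense_layer(inputs, weights, biases):
    """Each unit takes a weighted sum of its inputs, adds a bias, and applies tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Feed x through the layers in order, keeping every intermediate representation."""
    activations = [x]
    for weights, biases in layers:
        activations.append(dense_layer(activations[-1], weights, biases))
    return activations


rng = random.Random(0)  # fixed seed so repeated runs give the same weights
sizes = [4, 3, 3, 2]    # input size, two hidden layers, output size (arbitrary choices)
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

activations = forward([0.2, -0.1, 0.7, 0.4], layers)
for depth, values in enumerate(activations):
    print(depth, len(values))
```

Each layer is simple on its own; the representational power comes from composing them. The weights here are untrained, so the outputs carry no meaning. Learning would adjust the weights from data, which this sketch does not attempt.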

Probably the most accessible introduction to neural nets and deep learning is Geoff Hinton’s Coursera course. But it’s one thing to learn the basics, and another to be able to get them to work well. If you are new to Metacademy, you can find a bit more about the structure and motivation here.

Quoc Le’s Lectures on Deep Learning.

Dr. Quoc Le from the Google Brain project team (yes, the one that made headlines for creating a cat recognizer) presented a series of lectures at the Machine Learning Summer School (MLSS ’14) in Pittsburgh this week. This is my favorite lecture series from the event so far, and I was glad to be able to attend. The good news is that the organizers have made the entire set of video lectures available in 4K for you to watch. But since Dr. Le did most of them on the board and did not provide any accompanying slides, I decided to put the contents of the lectures along with the videos here. In this post I posted Dr.

Lecture 1: Neural Networks Review Dr.

Lecture 2: NNs in Practice If you have already covered NNs in the past, then the first lecture may have been a bit dry for you, but the real fun begins in this lecture when Dr.

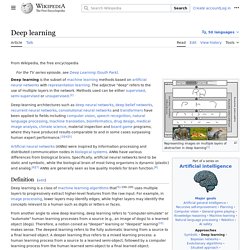

Yoshua Bengio's answer to Deep Learning: What are some fundamental deep learning papers for which code and data is available to reproduce the result and on the way grasp deep learning?

Deeplearning:slides:start.

Deep learning. Branch of machine learning.

Deep learning (also known as deep structured learning or differential programming) is part of a broader family of machine learning methods based on artificial neural networks with representation learning.

Learning can be supervised, semi-supervised or unsupervised.[1][2][3] Deep learning architectures such as deep neural networks, deep belief networks, recurrent neural networks and convolutional neural networks have been applied to fields including computer vision, speech recognition, natural language processing, audio recognition, social network filtering, machine translation, bioinformatics, drug design, medical image analysis, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance.[4][5][6] Artificial neural networks (ANNs) were inspired by information processing and distributed communication nodes in biological systems.

Learning deep architectures for AI - LISA - Publications - Aigaion 2.0.

Where are the Deep Learning Courses? This is a guest post by John Kaufhold.

Dr. Kaufhold is a data scientist and managing partner of Deep Learning Analytics, a data science company based in Arlington, VA. He presented an introduction to Deep Learning at the March Data Science DC.

Why aren’t there more Deep Learning talks, tutorials, or workshops in DC2? It’s been about two months since my Deep Learning talk at Artisphere for DC2. First some preemptive answers to the “FAQ” downstream of the talk: Mary Galvin wrote a blog review of this event. Yes, the slides are available. Yes, corresponding audio is also available (thanks Geoff Moes). A recently “reconstructed” talk combining the slides and audio is also now available!

There actually was a class… Aaron Schumacher and Tommy Shen invited me to come talk in April for General Assemb.ly’s Data Science course.