Metacognition. Metacognition is defined as "cognition about cognition", or "knowing about knowing".

It comes from the root word "meta", meaning beyond.[1] It can take many forms; it includes knowledge about when and how to use particular strategies for learning or for problem solving.[1] There are generally two components of metacognition: knowledge about cognition, and regulation of cognition.[2] Metamemory, defined as knowing about memory and mnemonic strategies, is an especially important form of metacognition.[3] Differences in metacognitive processing across cultures have not been widely studied, but could provide better outcomes in cross-cultural learning between teachers and students.[4] Some evolutionary psychologists hypothesize that metacognition is used as a survival tool, which would make metacognition the same across cultures.[4] Writings on metacognition can be traced back at least as far as De Anima and the Parva Naturalia of the Greek philosopher Aristotle.[5]

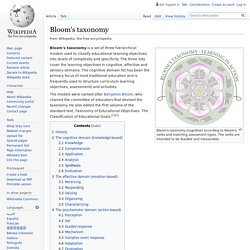

Bloom's taxonomy. Bloom's taxonomy (cognitive) according to Bloom's verbs and matching assessment types.

The verbs are intended to be feasible and measurable. Bloom's taxonomy is a set of three hierarchical models used to classify educational learning objectives into levels of complexity and specificity. The three lists cover the learning objectives in cognitive, affective and sensory domains. The cognitive domain list has been the primary focus of most traditional education and is frequently used to structure curriculum learning objectives, assessments and activities. The models were named after Benjamin Bloom, who chaired the committee of educators that devised the taxonomy.

Definition of Hive Mind by Merriam-Webster.

How Grid Computing Works. A scientist studying proteins logs into a computer and uses an entire network of computers to analyze data.

A businessman accesses his company's network through a PDA in order to forecast the future of a particular stock. An Army official accesses and coordinates computer resources on three different military networks to formulate a battle strategy. All of these scenarios have one thing in common: They rely on a concept called grid computing. At its most basic level, grid computing is a computer network in which each computer's resources are shared with every other computer in the system. Processing power, memory and data storage are all community resources that authorized users can tap into and leverage for specific tasks. The grid computing concept isn't a new one. Though the concept isn't new, it's also not yet perfected.

How the Internet of Things Works.

Many of us have dreamed of smart homes where our appliances do our bidding automatically.

The alarm sounds and the coffee pot starts brewing the moment you want to start your day. Lights come on as you walk through the house. Some unseen computing device responds to your voice commands to read your schedule and messages to you while you get ready, then turns on the TV news. Your car drives you to work via the least congested route, freeing you up to get caught up on your reading or prep for your morning meeting while in transit. We've read and seen such things in science fiction for decades, but they're now either already possible or on the brink of coming into being. The Internet of Things (IoT), also sometimes referred to as the Internet of Everything (IoE), consists of all the web-enabled devices that collect, send and act on data they acquire from their surrounding environments using embedded sensors, processors and communication hardware.

What is the IoT? Everything you need to know about the Internet of Things right now.

Cloud IoT Edge - Extending Google Cloud's AI & ML.

Edge computing: The state of the next IT transformation. In recent years, computing workloads have been migrating: first from on-premises data centres to the cloud and now, increasingly, from cloud data centres to 'edge' locations where they are nearer the source of the data being processed.

The goal? To boost the performance and reliability of apps and services, and reduce cost of running them, by shortening the distance data has to travel, thereby mitigating bandwidth and latency issues. That's not to say that on-premises or cloud centres are dead -- some data will always need to be stored and processed in centralised locations. But digital infrastructures are certainly changing. According to Gartner, for example, 80 percent of enterprises will have shut down their traditional data centre by 2025, versus 10 percent in 2018. There are challenges involved in edge computing, of course -- notably centering around connectivity, which can be intermittent, or characterised by low bandwidth and/or high latency at the network edge.

What is edge computing?

What is edge computing and how it’s changing the network.

Edge computing allows data produced by internet of things (IoT) devices to be processed closer to where it is created instead of sending it across long routes to data centers or clouds. Doing this computing closer to the edge of the network lets organizations analyze important data in near real-time – a need of organizations across many industries, including manufacturing, health care, telecommunications and finance.
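The idea of processing data near where it is created can be illustrated with a minimal Python sketch. It is not taken from any of the articles quoted here: the function name `summarize_at_edge`, the sample temperature readings and the alert threshold are all hypothetical. The point is only that an edge device can reduce a batch of raw readings to a small summary and send that upstream, rather than shipping every reading to a distant data center.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Reduce a batch of raw sensor readings to a compact summary.

    Hypothetical helper: in a real deployment this would run on the
    edge device, and only the returned summary would be sent upstream.
    """
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Keep only the readings that exceed the alert threshold.
        "alerts": [r for r in readings if r > threshold],
    }


# Made-up readings from an imaginary temperature sensor.
raw = [20.5, 21.0, 20.75, 35.25, 21.5]
summary = summarize_at_edge(raw, threshold=30.0)
print(summary)
```

In this sketch five raw values become one small record, and the single out-of-range reading is flagged locally, which is roughly the near real-time analysis the paragraph above describes.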

“In most scenarios, the presumption that everything will be in the cloud with a strong and stable fat pipe between the cloud and the edge device – that’s just not realistic,” says Helder Antunes, senior director of corporate strategic innovation at Cisco.