RDFa 1.1 Distiller and Parser
Warning: This version implements RDFa 1.1 Core, including the handling of the Role Attribute. The distiller can also run in XHTML+RDFa 1.0 mode (if the incoming XHTML content uses the RDFa 1.0 DTD and/or sets the version attribute). The package is available for download, although it may be slightly out of sync with the code running this service. If you intend to use this service regularly on a large scale, consider downloading the package and using it locally. Storing a (conceptually) “cached” version of the generated RDF, instead of referring to the live service, is another way to avoid overloading this server…

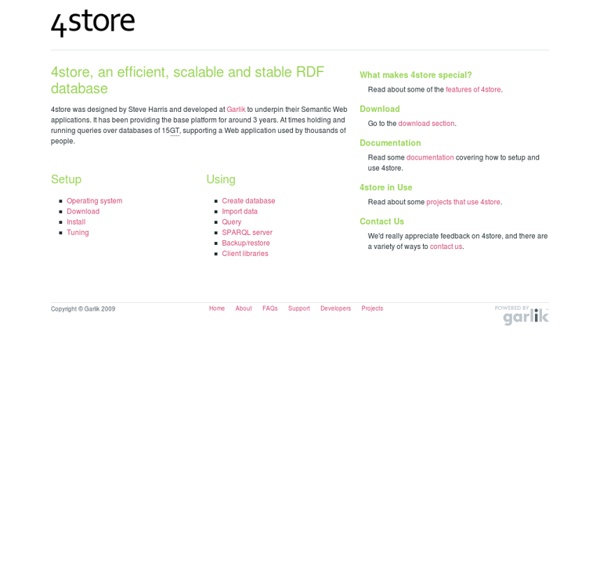

Putting WorldCat Data Into A Triple Store
By Richard Wallis on August 21, 2012. Published in Consuming Data, Data Publishing, Libraries, Linked Data, OCLC. Tagged: 4Store, Linked Data, OCLC, RDF, SPARQL, WorldCat.
I cannot really get away with making a statement like “Better still, download and install a triplestore [such as 4Store], load up the approximately 80 million triples and practice some SPARQL on them” and then not follow it up. I made it in my previous post, Get Yourself a Linked Data Piece of WorldCat to Play With, in which I highlighted the release of a download file containing RDF descriptions of the 1.2 million most highly held resources in WorldCat.org. To make the cut, a resource had to be held by more than 250 libraries.
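To make the “practice some SPARQL” step concrete, here is a minimal Python sketch of querying a local triplestore over the standard SPARQL 1.1 Protocol, where the query text is sent URL-encoded in a `query` parameter of a GET request. The endpoint URL and port (a 4store `4s-httpd` instance at `/sparql/` on port 8080) and the use of `schema:name` are assumptions about a local setup, not details from the post.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit
from urllib.request import Request, urlopen

# Assumption: a local 4store instance started with 4s-httpd, serving its
# SPARQL endpoint at /sparql/ on port 8080. Adjust for your installation.
ENDPOINT = "http://localhost:8080/sparql/"

# Assumption: the loaded WorldCat descriptions use schema.org terms.
QUERY = """PREFIX schema: <http://schema.org/>
SELECT ?work ?name
WHERE { ?work schema:name ?name . }
LIMIT 10"""


def sparql_get_url(endpoint: str, query: str) -> str:
    """Build a SPARQL 1.1 Protocol query-via-GET URL.

    The query text travels in the 'query' parameter; urlencode
    percent-encodes braces, '<', '>', newlines and other reserved characters.
    """
    return endpoint + "?" + urlencode({"query": query})


def run_query(endpoint: str, query: str) -> dict:
    """Send the query and decode the results as SPARQL JSON.

    Assumes the endpoint honours an Accept header asking for
    application/sparql-results+json; needs a running server.
    """
    request = Request(sparql_get_url(endpoint, query),
                      headers={"Accept": "application/sparql-results+json"})
    with urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    print(sparql_get_url(ENDPOINT, QUERY))
```

Building the URL does not touch the network; only `run_query(ENDPOINT, QUERY)` needs the triplestore to be up and loaded.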

Neo4j Sparql Plugin v0.2-SNAPSHOT
Insert quads: this endpoint enables the insertion of quads into the Neo4j Server.
Figure 1. Final Graph
POST application/json
Content-Type: application/json

rdf4h-0.9.1
'RDF for Haskell' is a library for working with RDF in Haskell. At present it includes parsers and serializers for RDF in the N-Triples and Turtle formats, and parsing support for RDF/XML. It provides abilities such as querying for triples containing a particular subject, predicate, or object, or selecting triples that satisfy an arbitrary predicate function.

Learn about SPARQL 1.1
David Beckett. This presentation is personal opinion. I am not speaking on behalf of my employer, Digg Inc. The slides are presented via S5.
Redland librdf C libraries: Raptor, Rasqal and the utilities rapper, roqet and rdfproc. Planet RDF. Triplr: a simple HTTP-based RDF syntax and query service. I invented the recursive acronym: SPARQL Protocol and RDF Query Language. Digg is where I work, but I am not doing any RDF or SPARQL work there.
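The two kinds of lookup described for rdf4h (matching on a particular subject, predicate or object, and filtering with an arbitrary predicate function) can be illustrated with a small Python analogy. This is not rdf4h's Haskell API: the `Triple`, `query` and `select` names below are hypothetical, and nodes are plain strings rather than typed RDF terms.

```python
from typing import Callable, Iterable, List, NamedTuple, Optional


class Triple(NamedTuple):
    s: str  # subject
    p: str  # predicate
    o: str  # object


def query(triples: Iterable[Triple], s: Optional[str] = None,
          p: Optional[str] = None, o: Optional[str] = None) -> List[Triple]:
    """Return triples matching the given subject, predicate and object.

    A position left as None acts as a wildcard.
    """
    return [t for t in triples
            if (s is None or t.s == s)
            and (p is None or t.p == p)
            and (o is None or t.o == o)]


def select(triples: Iterable[Triple],
           keep: Callable[[Triple], bool]) -> List[Triple]:
    """Return the triples for which an arbitrary predicate function is true."""
    return [t for t in triples if keep(t)]


graph = [
    Triple("ex:alice", "foaf:name", '"Alice"'),
    Triple("ex:alice", "foaf:knows", "ex:bob"),
    Triple("ex:bob", "foaf:name", '"Bob"'),
]

# Everything said about ex:alice.
alice_triples = query(graph, s="ex:alice")
# Triples whose object is a literal (written here with surrounding quotes).
literal_triples = select(graph, lambda t: t.o.startswith('"'))
```

Pattern lookup covers the common cases cheaply, while the predicate-function form handles anything a fixed pattern cannot express.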

RDF Graph Security in Virtuoso
Controlling access to Named Graphs and Graph Groups is critical to constructing a security matrix for any DBMS. This article describes how to do so in Virtuoso.
RDF Graph Groups: In some cases, the dataset of a SPARQL query is not known at compile time. It is possible to pass IRIs of source graphs via parameters, but the method is not perfect.

RelFinder - Visual Data Web
Are you interested in how things are related to each other? The RelFinder helps you get an overview: it extracts and visualizes relationships between given objects in RDF data and makes these relationships interactively explorable. Highlighting and filtering features support visual analysis on both a global and a detailed level. The RelFinder is based on the open source framework Adobe Flex, is easy to use, and works with any RDF dataset that provides standardized SPARQL access.
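RelFinder's idea of surfacing how two given objects are related can be approximated as a path search over triples. The sketch below is a plain breadth-first search over an in-memory list of triples, following links in either direction up to a maximum path length. It illustrates the concept only and is not RelFinder's implementation, which works against a SPARQL endpoint; the `ex:` resources are made up.

```python
from collections import deque


def find_paths(triples, start, goal, max_len=3):
    """Breadth-first search for relationship paths between two resources.

    Each step may follow a triple in either direction, because the point is
    to show that two objects are related, not in which direction. Paths
    never revisit a node and are returned shortest first, as lists of
    (subject, predicate, object) triples.
    """
    # Adjacency list: node -> [(neighbour, triple that links them), ...]
    adjacency = {}
    for triple in triples:
        subject, _, obj = triple
        adjacency.setdefault(subject, []).append((obj, triple))
        adjacency.setdefault(obj, []).append((subject, triple))

    paths = []
    queue = deque([(start, [], {start})])
    while queue:
        node, path, seen = queue.popleft()
        if node == goal and path:
            paths.append(path)
            continue
        if len(path) == max_len:
            continue
        for neighbour, triple in adjacency.get(node, []):
            if neighbour not in seen:
                queue.append((neighbour, path + [triple], seen | {neighbour}))
    return paths


data = [
    ("ex:alice", "ex:livesIn", "ex:leeds"),
    ("ex:leeds", "ex:locatedIn", "ex:uk"),
    ("ex:bob", "ex:livesIn", "ex:york"),
    ("ex:york", "ex:locatedIn", "ex:uk"),
    ("ex:alice", "ex:knows", "ex:bob"),
]

for p in find_paths(data, "ex:alice", "ex:bob", max_len=4):
    print(" / ".join(f"{s} {pred} {o}" for s, pred, o in p))
```

The `max_len` cap matters: the number of candidate paths grows quickly with length, which is why relationship explorers usually limit how far apart two objects may be.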

SPARQL - Personal Wiki
In the Semantic Web, SPARQL (SPARQL Protocol and RDF Query Language) is a query language for getting information from RDF graphs. It matches graph patterns, so it is also a graph matching language. Its syntax is a variant of Turtle adapted for querying, with variables denoted by ?.

Getting started using Virtuoso as a triplestore
Just about all the RDF triplestores I've been trying were designed from the ground up to store RDF triples. OpenLink Software's Virtuoso is a database server that can also store (and, as part of its original specialty, serve as an efficient interface to databases of) relational data and XML, so some of my setup and usage steps required learning a few other aspects of it first. For example, the actual loading of RDF is done using Virtuoso's WebDAV support, so I had to learn a bit about that. At first this seemed like another obstacle on the way to my goal of loading RDF and then issuing SPARQL queries against it, but I reminded myself that in a fast, free database server that supports a variety of data models, WebDAV support is most certainly a feature, not a bug.
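The wiki's description of SPARQL as graph pattern matching with ?-prefixed variables can be shown with a tiny evaluator for basic graph patterns: a list of triple patterns that must all match with consistent variable bindings. This is a simplified sketch, not a SPARQL engine. Terms are plain strings, there is no OPTIONAL, FILTER or UNION, and the example data is invented.

```python
def is_variable(term):
    """SPARQL variables are written with a leading '?'."""
    return term.startswith("?")


def match_pattern(pattern, triple, binding):
    """Extend `binding` so that one triple pattern matches one triple.

    Returns the extended binding, or None if the triple does not fit.
    """
    extended = dict(binding)
    for pattern_term, value in zip(pattern, triple):
        if is_variable(pattern_term):
            # A variable that is already bound must keep the same value.
            if extended.get(pattern_term, value) != value:
                return None
            extended[pattern_term] = value
        elif pattern_term != value:
            return None
    return extended


def evaluate_bgp(patterns, triples):
    """Evaluate a basic graph pattern: all patterns must match together."""
    solutions = [{}]
    for pattern in patterns:
        next_solutions = []
        for binding in solutions:
            for triple in triples:
                extended = match_pattern(pattern, triple, binding)
                if extended is not None:
                    next_solutions.append(extended)
        solutions = next_solutions
    return solutions


data = [
    ("ex:alice", "foaf:knows", "ex:bob"),
    ("ex:bob", "foaf:name", '"Bob"'),
    ("ex:alice", "foaf:name", '"Alice"'),
]

# Roughly: SELECT * WHERE { ?person foaf:knows ?friend . ?friend foaf:name ?name }
results = evaluate_bgp(
    [("?person", "foaf:knows", "?friend"), ("?friend", "foaf:name", "?name")],
    data,
)
print(results)
```

The shared variable `?friend` is what joins the two patterns: a binding produced by the first pattern survives only if the second pattern can match with the same value.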

SPARQL tips and tricks
This section describes SPARQL patterns that are frequently used for managing, understanding, and analyzing data. For example, it explains how to delete all data associated with an object and how to perform a cascaded delete. It also provides tips for understanding your data as a graph, such as analyzing social networks to find the most connected people and the size of their networks out to one or two degrees.

Tools
The OpenUp Client makes use of several features of OpenUp that we're working on, including the Data Enrichment Service (DES) and the RDF store. We wanted to experiment with things like web page extraction, what CORS can give you, and the performance of an application such as this. So, for now, it is just an experiment! The client is a bookmarklet that can be executed against any web page. The contents of the page are sent to the DES, and information is extracted from the document.
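The social-network analysis mentioned in the SPARQL tips entry (finding the most connected people and the size of their networks out to one or two degrees) can be prototyped outside the store. The sketch below assumes the (person, person) pairs have already been extracted from something like `foaf:knows` triples and treats the relationship as undirected. It runs in Python rather than SPARQL, so it shows the computation, not the tips' actual queries; the names are made up.

```python
from collections import defaultdict


def build_adjacency(edges):
    """Treat knows-style edges as undirected links between people."""
    adjacency = defaultdict(set)
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    return adjacency


def most_connected(adjacency, n=3):
    """Rank people by number of direct connections; ties broken by name."""
    return sorted(adjacency, key=lambda person: (-len(adjacency[person]), person))[:n]


def network_size(adjacency, person, degrees=2):
    """Count distinct people within `degrees` hops, excluding the person."""
    seen = {person}
    frontier = {person}
    for _ in range(degrees):
        # Expand one hop outward, ignoring anyone already counted.
        frontier = {nb for p in frontier for nb in adjacency[p]} - seen
        seen |= frontier
    return len(seen) - 1


edges = [("alice", "bob"), ("alice", "carol"), ("alice", "dave"),
         ("bob", "erin"), ("erin", "frank")]
people = build_adjacency(edges)

print(most_connected(people))
print(network_size(people, "alice", degrees=1), network_size(people, "alice"))
```

Subtracting `seen` at each hop is what keeps the two-degree count from double-counting people reachable by more than one route.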

Managing RDF Using Named Graphs
In this post I want to put down some thoughts around using named graphs to manage and query RDF datasets. This is prompted in large part by thinking about how best to use Named Graphs to support publishing of Linked Data, but also, most recently, by the first Working Drafts of SPARQL 1.1. While the notion of named graphs for RDF has been around for many years now, the closest they have come to being standardised as a feature is through the SPARQL 1.0 specification, which refers to named graphs in its specification of the dataset for a SPARQL query. SPARQL 1.1 expands on this, explaining how named graphs may be used in SPARQL Update, and also as part of the new Uniform HTTP Protocol for Managing RDF Graphs document. Named graphs are an increasingly important feature of RDF triplestores and are very relevant to the notion of publishing Linked Data, so their use and specification bear some additional analysis.
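One way to picture a store that manages RDF by named graph is a mapping from graph IRI to a set of triples, with whole-graph operations that loosely follow the HTTP verbs of the SPARQL 1.1 Uniform HTTP Protocol: GET reads a graph, PUT replaces it, POST merges into it, DELETE drops it. The `QuadStore` class and the graph IRI below are hypothetical, for illustration only.

```python
from collections import defaultdict


class QuadStore:
    """Minimal in-memory dataset of named graphs (hypothetical helper).

    Whole-graph operations loosely follow the HTTP verbs of the SPARQL 1.1
    Uniform HTTP Protocol: GET reads, PUT replaces, POST merges, DELETE drops.
    """

    def __init__(self):
        self.graphs = defaultdict(set)  # graph IRI -> set of (s, p, o)

    def get(self, graph):
        return set(self.graphs.get(graph, set()))

    def put(self, graph, triples):
        self.graphs[graph] = set(triples)

    def post(self, graph, triples):
        self.graphs[graph] |= set(triples)

    def delete(self, graph):
        self.graphs.pop(graph, None)

    def quads(self):
        """Flatten the dataset into (s, p, o, graph) quads."""
        for graph, triples in self.graphs.items():
            for s, p, o in triples:
                yield (s, p, o, graph)


BOOKS = "http://example.org/graphs/books"  # hypothetical graph IRI

store = QuadStore()
store.put(BOOKS, {("ex:b1", "dc:title", '"Moby Dick"')})
store.post(BOOKS, {("ex:b2", "dc:title", '"Emma"')})    # merge: 2 triples
store.put(BOOKS, {("ex:b3", "dc:title", '"Ulysses"')})  # replace: 1 triple
```

Replacing a whole graph in one operation is what makes named graphs convenient for publishing: reloading one source document touches only the graph it was loaded into.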

A Narrative for Understanding SPARQL’s FROM, FROM NAMED and GRAPH
When I first began learning SPARQL, I was fortunate enough not to have to try and tackle the named graphs concept of RDF and SPARQL at the same time. My queries were limited entirely to the default graph, and I had some time to learn SPARQL queries before moving on. In fact, in those days there were very limited educational resources on either RDF or SPARQL, and I learned mostly by example. But then I needed to tackle named graphs, and I can recall just how difficult it was to understand how the SPARQL constructs for working with them behave.
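The dataset rules behind FROM, FROM NAMED and GRAPH can be simulated in a few lines. In this sketch, graphs listed with FROM are merged into the default graph, while graphs listed with FROM NAMED stay separate and are visible only through a GRAPH pattern; if only FROM NAMED is given, the default graph is empty. The merge is simplified to a set union (a real RDF merge also keeps blank nodes from different graphs apart), and the graph names and helper functions are hypothetical.

```python
def build_dataset(store, from_graphs, from_named):
    """Assemble a query dataset from a dict of graph IRI -> set of triples.

    FROM graphs are merged into the default graph (simplified here to a set
    union); FROM NAMED graphs stay separate and are reachable only via GRAPH.
    """
    default_graph = set()
    for iri in from_graphs:
        default_graph |= store.get(iri, set())
    named_graphs = {iri: set(store.get(iri, set())) for iri in from_named}
    return default_graph, named_graphs


def match_default(dataset, predicate):
    """Like { ?s <predicate> ?o }: searches the default graph only."""
    default_graph, _ = dataset
    return sorted(t for t in default_graph if t[1] == predicate)


def match_graph(dataset, predicate):
    """Like GRAPH ?g { ?s <predicate> ?o }: searches named graphs only."""
    _, named_graphs = dataset
    return sorted((iri, s, o)
                  for iri, triples in named_graphs.items()
                  for s, p, o in triples if p == predicate)


store = {
    "ex:g1": {("ex:a", "ex:p", "ex:x")},
    "ex:g2": {("ex:b", "ex:p", "ex:y")},
}

# FROM ex:g1 FROM ex:g2 FROM NAMED ex:g2
both = build_dataset(store, ["ex:g1", "ex:g2"], ["ex:g2"])
# FROM NAMED ex:g1 only: the default graph is empty
named_only = build_dataset(store, [], ["ex:g1"])
```

The same graph can appear in both lists, as `ex:g2` does above: its triples are then matched by plain patterns through the merged default graph and, separately, by GRAPH patterns under its own name.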