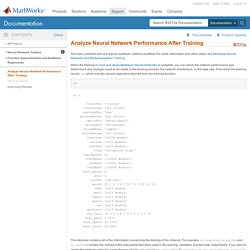

Analyze Neural Network Performance After Training. When the training in Train and Apply Multilayer Neural Networks is complete, you can check the network performance and determine if any changes need to be made to the training process, the network architecture, or the data sets.

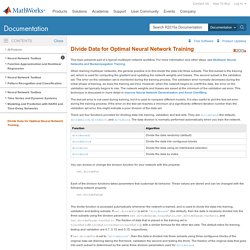

First check the training record, tr, which was the second argument returned from the training function. This structure contains all of the information concerning the training of the network. For example, tr.trainInd, tr.valInd and tr.testInd contain the indices of the data points that were used in the training, validation and test sets, respectively.

Training, testing and validating data set in Neura... - Newsreader - MATLAB Central.

Divide Data for Optimal Neural Network Training. When training multilayer networks, the general practice is to first divide the data into three subsets.
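The training record and its three index sets can be inspected directly after a call to `train`. The following is a minimal sketch, not taken from the quoted documentation: the `simplefit_dataset` sample data, the hidden layer size of 10, and the variable names `nTrain`, `nVal`, `nTest`, and `testPerf` are assumptions chosen for illustration.

```matlab
% Minimal sketch: train a small network and inspect the training record tr.
% Assumptions: simplefit_dataset as sample data, 10 hidden neurons.
[x, t] = simplefit_dataset;
net = feedforwardnet(10);
net.trainParam.showWindow = false;   % suppress the training GUI

% tr is the second output of train and records how training went
[net, tr] = train(net, x, t);

% Indices of the samples assigned to each subset
nTrain = numel(tr.trainInd);
nVal   = numel(tr.valInd);
nTest  = numel(tr.testInd);
fprintf('train/val/test sizes: %d / %d / %d\n', nTrain, nVal, nTest);

% Error measured on the held-out test samples only
y = net(x);
testPerf = perform(net, t(tr.testInd), y(tr.testInd));
fprintf('test performance (MSE by default): %g\n', testPerf);
```

Evaluating on `tr.testInd` alone gives an error estimate from samples that influenced neither the weight updates nor early stopping.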

The first subset is the training set, which is used for computing the gradient and updating the network weights and biases.

- [Help] What exactly is the training algorithm of a BP neural network in MATLAB? - Simulation
- Artificial intelligence - what is the difference between train, validation and test set, in neural networks?
- Matlab neural network toolbox - get errors of the test data during training process.
- Divide targets into three sets using random indices - MATLAB dividerand.
- Divide targets into three sets using blocks of indices - MATLAB divideblock.
- [Question] About the testing set in a neural network - MATLAB board
- How to train neural networks on big sample sets in Matlab?

Fundamental difference between feed-forward neural networks and recurrent neural networks? I've often read that there are fundamental differences between feed-forward and recurrent neural networks (RNNs), due to the lack of an internal state and a therefore short-term memory in ...

Train Neural Networks on a Data of type Table. I loaded a huge CSV file of 2000 instances with numeric values and limited text values (some attributes have values like 'Yes', 'No', 'Maybe'). The above data was imported using readtable in Matlab. I ...

Deep Belief Networks vs Convolutional Neural Networks. I am new to the field of neural networks and I would like to know the difference between Deep Belief Networks and Convolutional Networks.

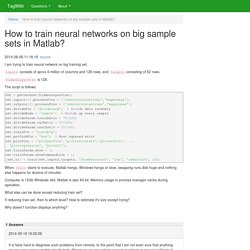

Setting input data division ratio - MATLAB Answers - MATLAB Central. Hi Hoda, let me paste an example of a simple 2-layer feed-forward network to see if this works for you. You should be able to reproduce it with the same dataset, cancer_dataset.mat, which comes with the NN toolbox:

    load cancer_dataset;
    % 2 neurons in the first layer (tansig) and 1 neuron in the second layer
    % (purelin).
    % Levenberg-Marquardt backpropagation is used.
    mlp_net = newff(cancerInputs,cancerTargets,2,{'tansig'},'trainlm');
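The quoted answer stops before the division ratios are actually set. As a hedged sketch of one way to do it, the ratios can be assigned through the network's `divideParam` property before training. Here `feedforwardnet` is used instead of the `newff` call from the post, and the 60/20/20 split and the names `Q` and `fracs` are illustrative assumptions, not values from the original thread.

```matlab
% Sketch: set the train/validation/test ratios before training.
% Assumptions: feedforwardnet replaces newff; 60/20/20 is an arbitrary split.
load cancer_dataset;                  % provides cancerInputs, cancerTargets
net = feedforwardnet(2);              % 2 hidden neurons, as in the post

net.divideFcn = 'dividerand';         % random assignment of samples
net.divideParam.trainRatio = 0.6;
net.divideParam.valRatio   = 0.2;
net.divideParam.testRatio  = 0.2;
net.trainParam.showWindow  = false;   % suppress the training GUI

[net, tr] = train(net, cancerInputs, cancerTargets);

% Check the split that was actually used
Q = size(cancerInputs, 2);
fracs = [numel(tr.trainInd), numel(tr.valInd), numel(tr.testInd)] / Q;
disp(fracs);
```

Because the subset sizes are rounded to whole samples, the realized fractions are only approximately equal to the requested ratios.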

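How do I build the training set and the test set? - MATLAB Basics Discussion - MATLAB Chinese Forum. Koala's learning notes on implementing BP neural networks in MATLAB (5): optimizing a BP neural network traffic-prediction program. Note: the dividevec() function can still be used in version 7.6. Shuffling the data before feeding it to the network makes the data more representative and improves generalization.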

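A method for shuffling the data and splitting it into training data, validation data, and test data. I searched on Baidu and found a few methods, but surprisingly few of them use MATLAB's built-in functions directly. In fact, MATLAB's built-in function dividevec is fully up to this job, and I recommend it. One problem remains: because the data is shuffled, it is hard to put it back in order when analyzing the results, since the positions of the samples in the original array are lost. For concrete usage, see my BP traffic-prediction example below.

I am using version 7.0, with Neural Network Toolbox Version 5.0.2 (R2007a). Yesterday I looked through the new nnet manual on the MathWorks site, and the problem above has been solved. The manual no longer seems to describe dividevec, but it adds new functions that do the same job and also return the indices. I do not have the new Neural Network Toolbox Version 6.0 (R2008a) installed, so this is only my reading of the guide; if you have the new version, please check whether the functions really behave this way.

The new functions are divideblock, divideind, divideint, and dividerand. Their usage and purpose are essentially the same, and they differ only in how the samples are picked: by contiguous blocks, by user-specified indices, by interleaved indices, and at random, respectively. Taking divideblock as an example, the basic usage is `[trainV,valV,testV,trainInd,valInd,testInd] = divideblock(allV,trainRatio,valRatio,testRatio)`, that is, [training data, validation data, test data, training indices, validation indices, test indices] = divideblock(all data, training fraction, validation fraction, test fraction).

The real difference between dividevec and the four newer functions is that dividevec is usually called directly in MATLAB code, whereas the four newer functions are normally applied by setting the network's divideFcn property, for example `net.divideFcn = 'divideblock'`. That does not mean they cannot also be called directly in code, the way dividevec is.

Improve Neural Network Generalization and Avoid Overfitting.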
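As a rough sketch of the direct-call style mentioned in the forum post, the division functions can be called on their own to preview which samples land in which subset. Note one assumption: this uses the calling form of more recent toolbox releases, where the first argument is the number of samples `Q` and only index vectors are returned, which differs from the six-output form the post quotes from the R2008a guide. The sample count and ratios are illustrative.

```matlab
% Sketch: call the division functions directly and compare their index sets.
% Assumption: the newer calling form (sample count in, indices out).
Q = 10;   % illustrative number of samples

[trainB, valB, testB] = divideblock(Q, 0.6, 0.2, 0.2);  % contiguous blocks
[trainI, valI, testI] = divideint(Q, 0.6, 0.2, 0.2);    % interleaved indices
[trainR, valR, testR] = dividerand(Q, 0.6, 0.2, 0.2);   % random indices

disp('divideblock:'); disp(trainB); disp(valB); disp(testB);
disp('divideint:');   disp(trainI); disp(valI); disp(testI);
disp('dividerand:');  disp(trainR); disp(valR); disp(testR);

% Because the indices are returned, the original sample order is recoverable
allB = sort([trainB, valB, testB]);
allI = sort([trainI, valI, testI]);
allR = sort([trainR, valR, testR]);
```

Returning indices rather than shuffled copies of the data is what addresses the reordering problem the post describes: results can be mapped back to their original positions.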

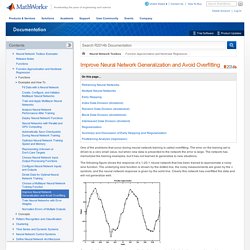

One of the problems that occur during neural network training is called overfitting.

The error on the training set is driven to a very small value, but when new data is presented to the network the error is large. The network has memorized the training examples, but it has not learned to generalize to new situations. The following figure shows the response of a 1-20-1 neural network that has been trained to approximate a noisy sine function.